Weekly #61: The GPU vs TPU Debate Is a Distraction

Portfolio +33.1% YTD, 2.3x the market since inception. Why the real AI bet isn’t the chip, but the infrastructure behind it.

Hello fellow Sharks,

This week the portfolio gained some ground and widened the gap versus the S&P 500. If you want to skip straight to the numbers, jump to the Portfolio Update.

Celestica traded above $350 per share earlier this month. Since then, the stock has pulled back and now trades about 17% below that recent high.

I’ve seen a few questions pop up about what changed.

Short answer: not much.

So I want to use this week’s Thought of the Week to zoom out and frame Celestica the right way. The debate right now is all about GPUs versus TPUs. Nvidia versus custom silicon. Who wins the AI race.

I think that framing misses the point.

Celestica is not a bet on which chip architecture wins. It’s a bet on the AI build-out itself. No matter which technology dominates, the hardware still needs to be built, integrated, cooled, powered, and shipped. That’s where Celestica sits.

That’s what I want to unpack this week.

Enjoy the read, and have a great Sunday!

~George


In Case You Missed It

I sent a Trade Alert last week on an energy stock that the market still treats like yesterday’s news, even though the fundamentals look anything but sleepy.

This ties straight back to what I laid out in Weekly #58: energy still sits as the cheapest sector in the S&P 500, and I wanted to finally put that mispricing to work.

The idea lives in an unloved corner of the oil and gas world, throws off real cash, and trades at a discount to what similar assets go for. I also like the setup because it has clear levers that can close that gap without needing heroic oil prices.

Thought of the Week: GPUs vs TPUs: Two Paths in the AI Race

I’ve been thinking about how tech often splits into rival formats.

Remember the VHS vs Betamax war?

Or the one between Blu-ray and HD DVD?

Back then we would argue over which video format would win. Today, people talk about GPUs vs TPUs like they’re a similar rivalry.

But it’s actually different.

Instead of fighting over one prize, these chips serve different parts of the AI world. GPUs and TPUs complement each other, so this is not a contest where one wins and the other disappears.

GPUs and TPUs are both hardware chips for computing, but they’re built for different tasks in the AI lifecycle. GPUs (Graphics Processing Units) started out in gaming cards and now train and run AI models. TPUs (Tensor Processing Units) are custom chips made by Google just for AI math. Think of a GPU like a multi-tool gadget (a Swiss Army knife) that can do lots of different things. A TPU is more like a precision tool (a scalpel) tuned for one job: running neural-network math really fast. Each has its strengths and weaknesses in training (building models) and inference (using models to answer queries).

What Are GPUs and TPUs?

GPUs were originally made to speed up graphics and video games (I still remember when I bought my first graphics card to play Counter-Strike in my university dorm against my roommates), but they also handle heavy AI workloads.

In simple terms, a GPU is a chip with thousands of small processors (CUDA cores, in the case of NVIDIA) working in parallel. It breaks big tasks into many little ones that run at the same time. For example, to train a neural network, a GPU can do thousands of calculations on different parts of a matrix (a grid of numbers) all at once. That’s why GPUs excel at machine learning training: they can process huge datasets with many math ops side by side.
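To make the "many little tasks at once" idea concrete, here is a minimal Python sketch. It is not GPU code: a thread pool stands in for GPU cores, and the names `cell_task` and `matmul_as_tasks` are made up for illustration. The point it shows is that every cell of the output matrix can be computed without waiting on any other cell, which is what lets a GPU spread the work across thousands of cores.

```python
from concurrent.futures import ThreadPoolExecutor

def cell_task(a, b, i, j):
    """Compute one output cell: row i of a dotted with column j of b."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))

def matmul_as_tasks(a, b, workers=4):
    """Multiply a (n x m) by b (m x p) as n*p independent tasks."""
    n, p = len(a), len(b[0])
    coords = [(i, j) for i in range(n) for j in range(p)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # No task reads another task's result, so they can run in any order.
        flat = list(pool.map(lambda ij: cell_task(a, b, *ij), coords))
    # Reassemble the flat list of cells into rows.
    return [flat[r * p:(r + 1) * p] for r in range(n)]

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
result = matmul_as_tasks(a, b)
print(result)  # [[19, 22], [43, 50]]
```

A 2 x 2 example only has four tasks. The matrices inside a large neural network have millions of cells, which is where the parallel hardware pays off.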

By contrast, a TPU is a chip Google designed specifically for AI. A TPU is not a jack-of-all-trades; it’s built to excel at tensor math, which is basically matrix multiplication and addition. Google’s blog explains that its first-generation TPU contains 65,536 tiny 8-bit multipliers, whereas a cloud GPU of that era might only have a few thousand 32-bit multipliers. This massive array of mini-multipliers (called a systolic array) lets the TPU do an enormous amount of math each clock cycle. The trade-off is that TPUs are less flexible than GPUs. A GPU can handle graphics, video, simulations, or AI. A TPU is laser-focused on neural network math.
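For readers who want to see what a grid of multipliers does, here is a toy Python sketch. The 65,536 figure corresponds to a 256 x 256 grid. Everything else is a simplification I'm assuming for illustration: the function names are hypothetical, the grid is only 3 x 2, and the staggered timing that real systolic arrays use to pass data between neighbours is left out.

```python
GRID = 256  # a 256 x 256 grid gives the 65,536 multipliers cited above
assert GRID * GRID == 65_536

def to_int8(x):
    """Clamp a value into the signed 8-bit range [-128, 127]."""
    return max(-128, min(127, int(x)))

def mac_grid_matvec(weights, inputs):
    """Toy multiply-accumulate grid: one cell per weight.

    Each cell multiplies its stored 8-bit weight by the incoming 8-bit
    input and adds the product to a running sum for its column.
    Real systolic arrays also stagger data in time; that is omitted here.
    """
    rows, cols = len(weights), len(weights[0])
    sums = [0] * cols  # wide accumulators, one per column
    for r in range(rows):
        x = to_int8(inputs[r])
        for c in range(cols):
            sums[c] += to_int8(weights[r][c]) * x
    return sums

weights = [[1, -2], [3, 4], [5, 6]]  # 3 x 2 grid of cells
inputs = [10, 20, 30]
outputs = mac_grid_matvec(weights, inputs)
print(outputs)  # [220, 240]
```

The cells only ever multiply and add small integers. That narrow job description is why so many of them fit on one chip, and also why the chip is less flexible than a GPU.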

When it comes to building these chips, both use advanced manufacturing but focus on different tech. GPUs (like NVIDIA’s Blackwell series) pack high-end floating-point units and large memory on a chip, often cooled with fans or liquid. TPUs (Google’s Ironwood generation) pack very high-bandwidth memory and liquid cooling into a rack. An Ironwood TPU carries 192 GB of high-bandwidth memory (HBM) on a single chip, versus 80 GB on an NVIDIA H100, to keep up with the data flow.

Essentially, TPUs are engineered to feed their math cores data as fast as possible and stay cool while doing it.

Cost is handled differently too. High-end GPUs are sold to many companies and hobbyists; a single A100 or H100 GPU can cost on the order of ten thousand dollars. Data center GPUs come from more than one vendor (NVIDIA, AMD, etc.), and you can buy them outright. TPUs aren’t sold as stand-alone chips in the same way. You normally access a TPU through Google’s cloud (paying an hourly rate of a few dollars) or as part of Google’s internal infrastructure. So GPUs are more flexible to own and deploy in different clouds and servers, while TPUs are tightly tied to Google’s ecosystem.
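A rough way to compare owning with renting is a break-even calculation. The sketch below is back-of-the-envelope only. The $10,000 price and the $3 hourly rate are my assumptions, picked to match "on the order of ten thousand dollars" and "a few dollars" above, and the function name is hypothetical. It ignores power, hosting, resale value, and the fact that the two chips do not run at the same speed.

```python
def break_even_hours(purchase_price, hourly_rate):
    """Hours of rental that cost as much as buying outright."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return purchase_price / hourly_rate

ASSUMED_GPU_PRICE = 10_000.0  # assumption: "on the order of ten thousand dollars"
ASSUMED_HOURLY_RATE = 3.0     # assumption: "a few dollars" per hour

hours = break_even_hours(ASSUMED_GPU_PRICE, ASSUMED_HOURLY_RATE)
days = hours / 24
print(f"{hours:.0f} hours, about {days:.0f} days of continuous use")
```

Under those assumptions, renting only gets more expensive than buying after several months of round-the-clock use, which is why occasional users tend to rent and heavy users tend to own.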

Training vs Inference: Where They Shine

I like to split the AI pipeline into two big stages: training (teaching a model) and inference (using the model to make predictions). GPUs and TPUs both handle each stage, but one often has the edge in each.

Training

Traditionally, GPUs have been the workhorses of training deep neural nets. They’re programmable and broad, letting researchers try new ideas in frameworks like PyTorch. A GPU’s thousands of cores are ideal for the linear algebra in backpropagation.

In practice, most universities and many companies train models on GPUs. However, Google has moved to train its largest models (like Gemini) on its custom TPUs. For example, Google’s Gemini 3 model was trained entirely on 7th-gen TPUs (“Ironwood”), linking tens of thousands of chips together. Google finds that for huge models at its scale, TPUs deliver faster results.

Inference

After a model is trained, it’s used to answer questions or make decisions: this is inference. Here, energy and latency matter a lot. TPUs are especially strong in inference. Newer TPU generations were designed specifically for inference at scale, giving very low latency and high throughput.

Google built TPUs to answer queries lightning-fast and efficiently. GPUs can also do inference and are widely used (especially with optimizations like TensorRT), but they often consume more power and need more tuning.

A good analogy is water flow: GPUs are wide pipes that carry a lot of water, useful for many scenarios, whereas TPUs are turbo-charged pumps that deliver huge pressure to push data through very quickly. In the real world, many companies do a mix: they might train models on GPUs or TPUs, and then pick the hardware that gives the fastest, cheapest inference.

Key differences

GPUs have very mature software (CUDA, PyTorch, TensorFlow) and can run all sorts of AI models. TPUs fit best into Google’s stack (TensorFlow, JAX, XLA).

GPUs are moderate in energy efficiency, while TPUs aim for higher performance-per-watt on AI tasks. Google says Ironwood TPUs have about 2x the performance-per-watt of the previous generation. So if electricity cost is important (like in a giant data center), TPUs have a real advantage.
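Here is why performance-per-watt matters for the power bill, as a small Python sketch. All the inputs (workload size, baseline efficiency, electricity price) are hypothetical numbers I chose so the arithmetic is easy to follow. Only the 2x ratio comes from the Google claim above.

```python
def energy_cost(work_units, perf_per_watt, price_per_kwh):
    """Electricity cost of a fixed workload.

    perf_per_watt is work units per second per watt, so
    work_units / perf_per_watt is energy in joules (watt-seconds).
    """
    joules = work_units / perf_per_watt
    kwh = joules / 3_600_000  # 1 kWh = 3.6 million joules
    return kwh * price_per_kwh

WORK = 7.2e12          # hypothetical workload size
BASE_EFFICIENCY = 1e3  # hypothetical work units per second per watt
PRICE = 0.10           # hypothetical dollars per kWh

old_cost = energy_cost(WORK, BASE_EFFICIENCY, PRICE)
new_cost = energy_cost(WORK, 2 * BASE_EFFICIENCY, PRICE)
print(old_cost, new_cost, new_cost / old_cost)
```

For the same amount of work, doubling performance-per-watt halves the energy used, so the electricity bill for that workload is cut in half whatever the starting numbers are.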

No doubt, there are trade-offs: GPUs can handle any computation you throw at them (graphics, physics, blockchain, etc.), whereas TPUs are not meant for general math. But for neural network math (tensors), TPUs can outperform GPUs per watt.

Celestica’s Place in the AI Hardware Chain

Now, let’s talk about Celestica (CLS). If you’ve been around this newsletter for a while, you already know how bullish I am on it. CLS is my largest portfolio position, and that’s not by accident.

Celestica is a Canadian electronics manufacturer that builds complex hardware systems. If you’ve ever wondered who actually assembles the servers and racks that power AI data centers, the answer is often companies like Celestica. They don’t design the chips. NVIDIA and Google do that. Celestica handles everything around them. Chip packaging. Circuit board assembly. Integration of GPU cards or TPU boards. Full rack build-outs.

If you want the full background on why I see CLS as one of the most important picks in the AI stack, you can read my original deep dive here:

For example, CLS handles system-level board and rack integration, assembly, and testing, delivering the ‘last mile’ of Google’s AI server deployments. In other words, Celestica builds the AI servers and racks that Google uses. CLS went from a traditional contract manufacturer to Google’s primary builder of liquid-cooled racks, high-voltage power architectures, and next-generation switching systems. That means Celestica now builds things like custom liquid-cooling frames (needed by TPUs) and top-of-rack switches.

What about GPUs? Well, Celestica also works with other cloud clients that use GPU-based systems. Microsoft (MSFT), Amazon (AMZN), Meta (META), etc., all build AI servers in collaboration with hardware partners.

We know Microsoft (Azure) is a Celestica customer for certain data center hardware, so CLS indirectly participates in NVIDIA GPU farms too. Even if Celestica isn’t putting the NVIDIA chips on the board, it assembles the boards, racks, and networking that tie those GPUs together.

In fact, the CEO of Celestica mentioned that every hyperscaler that ran 400G networking upgrades (for GPU clusters) has now given Celestica 800G switch projects. In short, whether a client is running GPUs or TPUs, Celestica’s name is likely on the hardware somewhere.

My own channel checks in Asia tie Celestica to Google’s TPU efforts. Google is building TPU-driven data centers around the world, and Celestica is a key partner in this build-out. Celestica has quietly become crucial to Google’s TPU infrastructure. For example, Gemini 3 (Google’s latest AI model) was trained on Google’s 7th-gen TPUs built in custom racks, racks that Celestica helps construct.

Meanwhile, any boost to AI spending helps Celestica. When news broke that Meta is in talks to use Google’s TPU technology, Celestica’s stock jumped.

Similarly, announcements about big GPU deployments (like NVIDIA’s multi-gigawatt plan with OpenAI) bode well for Celestica, because those GPUs still need to live in servers that Celestica builds. In essence, Celestica is a play on the demand for AI hardware itself, not on whether GPUs or TPUs are “winning.”

Why the GPU vs TPU Battle Doesn’t Change Celestica’s Game

At first glance, you might think: if everyone starts using TPUs instead of GPUs, won’t NVIDIA and its partners suffer?

Possibly. But for Celestica, it doesn’t matter which chip wins. The key is that both GPU and TPU growth fuel Celestica’s business. All the data centers need to be built, cooled, and connected, no matter the chip brand.

I think GPUs and TPUs could become complementary, each suited to different tasks, and AI compute demand will keep growing regardless of which architecture ends up on top. In other words, it’s not a zero-sum war like VHS vs Betamax. Big cloud providers will use both GPUs and TPUs where they fit best.

For Celestica, that means more orders rather than fewer. Whether Google or Meta or Amazon is buying, they all need hardware. Celestica’s CEO made this point during earnings calls: when hyperscalers budget billions for AI, a slice goes to networking and systems that Celestica builds. Celestica is literally “following the money” in AI capex. Their revenues are booming because every hyperscaler is ramping up GPU clusters or TPU farms.

Celestica is selling shovels to two gold miners racing each other. Whichever brand of pickaxe they swing (GPUs or TPUs), Celestica’s shovels are the same. The real bet is on the gold rush itself (the AI boom), not on whose pickaxe wins.

Portfolio Update

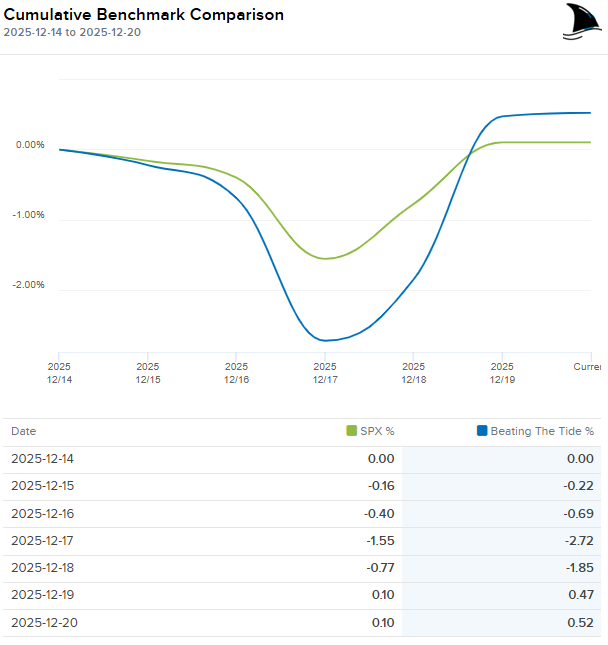

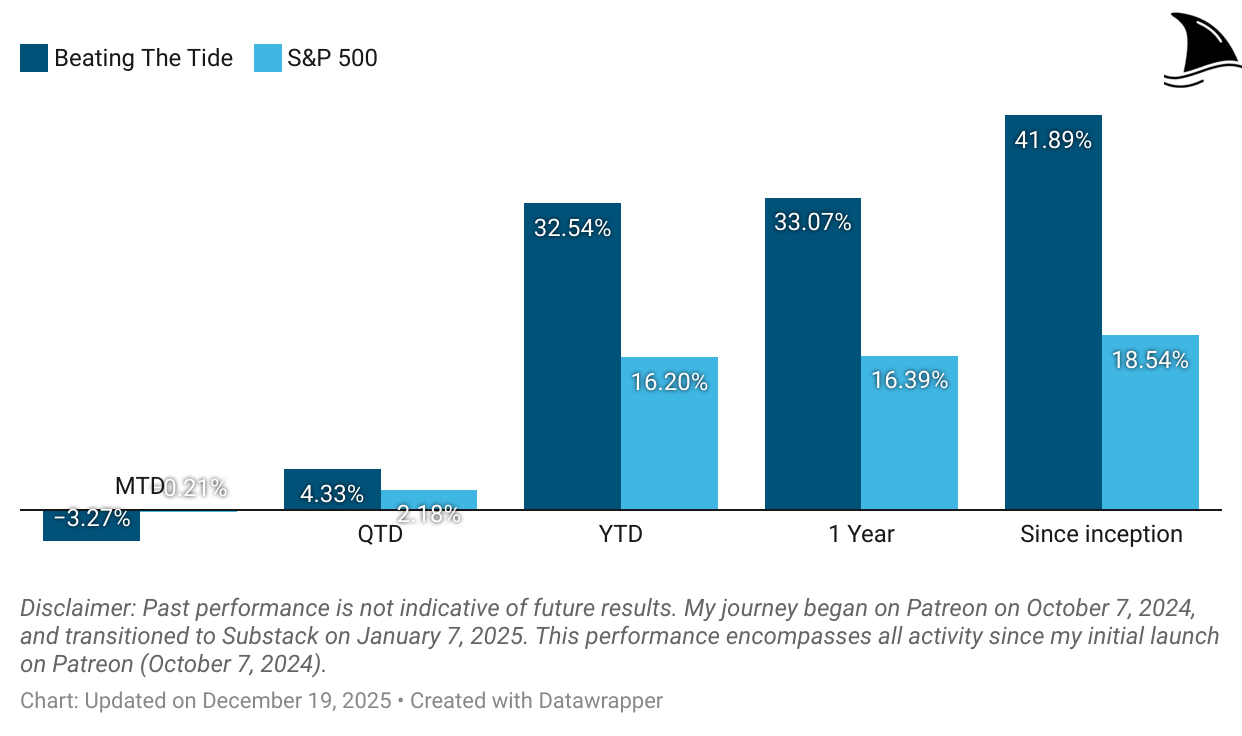

The portfolio ended the week ahead of the S&P 500, expanding our outperformance.

Month-to-date: -3.3% vs. the S&P 500’s -0.2%.

Year-to-date: +33.1% vs. the S&P’s +16.4%, a gap of roughly 1,670 basis points.

Since inception: +41.9% vs. the S&P 500’s +18.5%. That’s 2.3x the market.
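For anyone who wants to check the math behind these comparisons, here is a short Python sketch. One percentage point equals 100 basis points, and the "x the market" figure is simply one cumulative return divided by the other. The function names are mine, and the inputs are the rounded figures quoted above, so results can differ slightly from numbers computed on unrounded returns.

```python
def gap_in_bps(portfolio_pct, benchmark_pct):
    """Difference between two percentage returns, in basis points."""
    return round((portfolio_pct - benchmark_pct) * 100)

def return_multiple(portfolio_pct, benchmark_pct):
    """Ratio of two cumulative percentage returns."""
    return portfolio_pct / benchmark_pct

mtd_gap = gap_in_bps(-3.3, -0.2)
since_inception_gap = gap_in_bps(41.9, 18.5)
since_inception_multiple = return_multiple(41.9, 18.5)
print(mtd_gap, since_inception_gap, round(since_inception_multiple, 1))
# -310 2340 2.3
```

A negative gap means the portfolio trails the index over that window, which is the case month-to-date even though the longer windows are well ahead.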

Portfolio Return

Contribution by Sector

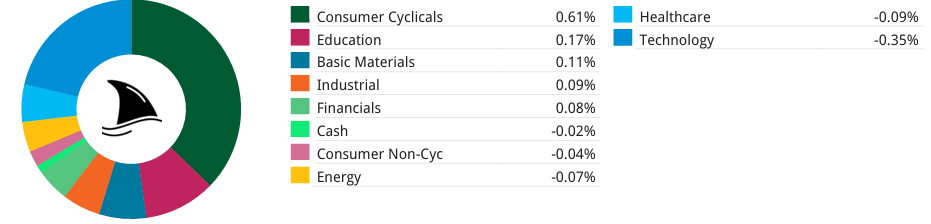

This week, consumer cyclicals led the gains, partially offset by tech.

Contribution by Position

(For the full breakdown, see Weekly Stock Performance Tracker)

+8 bps AGX (Thesis)

+7 bps KINS (Thesis)

+6 bps DXPE (Thesis)

+2 bps LRN (Thesis)

-4 bps POWL (Thesis)

-6 bps TSM (Thesis)

-6 bps OPFI (Thesis)

-8 bps STRL (Thesis)

-47 bps CLS (TSX: CLS) (Thesis)

That’s it for this week.

Stay calm. Stay focused. And remember to stay sharp, fellow Sharks!
