Weekly #40: 8 for 8 on EPS Beats + Why Investing Will Be One of the Last Jobs AI Replaces

Portfolio up 13.5% YTD and about 2x the market since inception. 8/8 portfolio companies beat EPS. Plus my take on AI and why investors still have an edge.

Hello fellow Sharks,

Busy week. Q2 earnings season is in full swing, and eight of our holdings have reported since my last update. We went eight for eight on EPS beats. Two prints missed on revenue, while the rest surprised to the upside.

The portfolio is up 13.5% YTD and about two times the market since inception. (If you want to skip ahead to the Portfolio Update, click here.)

I also want to zoom out for a minute. My thought of the week tackles a hot topic: AI and jobs. I use AI every week, but I still believe investing will be one of the last professions that software fully replaces. In the piece, I explain where AI helps, where it hurts, and why judgment still matters in markets.

Enjoy the read!

~ George

In Case You Missed It

OPFI Deep Dive: The Fintech Lender That’s Beating the Odds

I published a full deep dive on OppFi on July 23. Since we first bought the stock, we’re up about +51%, and I still see roughly 100% further upside from here. The thesis is simple. OPFI is turning underwriting into a software problem. Its latest AI model, “Model 6,” now approves close to four out of five applications without human intervention, which improves speed, consistency, and unit economics.

The business model helps, too. OPFI partners with banks that originate the loans, while OppFi provides the tech and servicing. That structure keeps the balance sheet lighter, expands reach across states, and lowers operating costs. Credit performance has been trending in the right direction, with Q1 2025 showing solid net revenue growth and healthier charge-off dynamics.

On valuation, the stock still trades around 3.2x free cash flow, which leaves ample room for a rerating as execution continues. My fair value remains about $21 per share, with upside driven by cash generation, operating leverage from AI underwriting, and continued discipline on credit.
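As a rough sanity check on how those figures fit together, here is a minimal back-of-envelope sketch. It assumes the "roughly 100% upside" is measured from the current price to the $21 fair value, and that the 51% gain is measured from the first purchase price. The implied prices are derived from rounded inputs, not quoted figures.

```python
# Back-of-envelope check using the rounded figures from the text.
fair_value = 21.00        # fair value per share, from the text
upside = 1.00             # "roughly 100% further upside"
gain_since_entry = 0.51   # gain since the first purchase, from the text

# Assumption: upside is measured from the current price to fair value.
implied_price = fair_value / (1 + upside)
# Assumption: the gain is measured from the first purchase price.
implied_entry = implied_price / (1 + gain_since_entry)

print(f"Implied current price: ${implied_price:.2f}")
print(f"Implied first entry:   ${implied_entry:.2f}")
```

Because every input is rounded, treat the outputs as ballpark values only.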

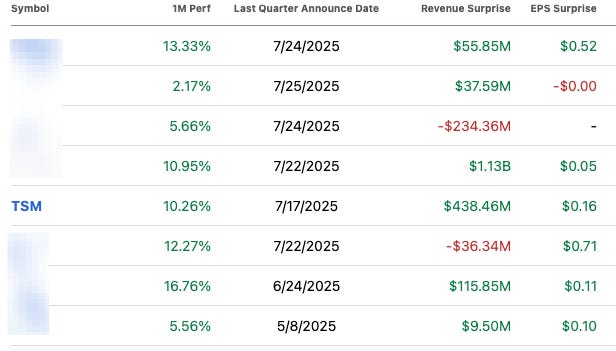

Q2 Earnings Season Update: None Missed EPS Estimates

Roughly one-third of S&P 500 companies have reported, and about 80% have beaten EPS estimates, well above the long-term average beat rate. Blended y/y EPS growth for Q2 is tracking in the mid-single digits (roughly 5.6%–7.0% for the S&P 500; about 8.9% ex-Energy), with revenue growth around 4%. Net profit margins remain healthy at a blended 12.3% (the fifth straight quarter ≥12%). FactSet notes, however, that while the percentage of beats is above average, the magnitude of beats is below average.

Since our last earnings update on June 15, eight companies in the portfolio reported. None missed the EPS consensus. Two missed revenue consensus; the rest posted positive revenue surprises. (Example: TSM beat by $0.16 on EPS and by ~$438M on revenue. Read my analysis on TSM’s earnings here.)

Thought Of The Week: Why I Think Investing Will Be One of the Final Jobs Taken Over by AI

I use ChatGPT a lot in my research process, and I know many people do. I also use it to understand my blood test results before my doctor appointment, to parse tax law in different jurisdictions, and to learn new things. It’s a fantastic tool, but like any tool, there’s a right way and a wrong way to use it. Lately, everyone’s buzzing about how AI will take over jobs.

Will AI replace human investors?

This question hit me again while working on my OPFI deep dive. Their latest credit model now approves about 80 percent of loan applications without a human in the loop, and it is doing a solid job. Seeing that in action pushed me to revisit a constant question I have about AI and the future of the profession I chose.

I’m optimistic about AI’s potential, but I suspect investing will be one of the last jobs that AI truly takes over. And to be fair, you should take my view with a grain of salt. I’m biased. When you hold a hammer, everything can look like a nail.

We humans tend to get overly optimistic or overly pessimistic about new tech. Remember the 2000 dot-com bubble. People thought the internet would instantly change everything. They were right about its impact but off on the timing.

I see parallels with today’s AI frenzy. Yes, AI will transform industries. It is already happening in some areas. But perhaps not as fast as the market expects.

In this piece, I will share what has worked for me when using AI, what has not, and why I believe human judgment in investing will endure longer than the hype suggests.

The Right vs. Wrong Way to Use ChatGPT (AI as a Tool)

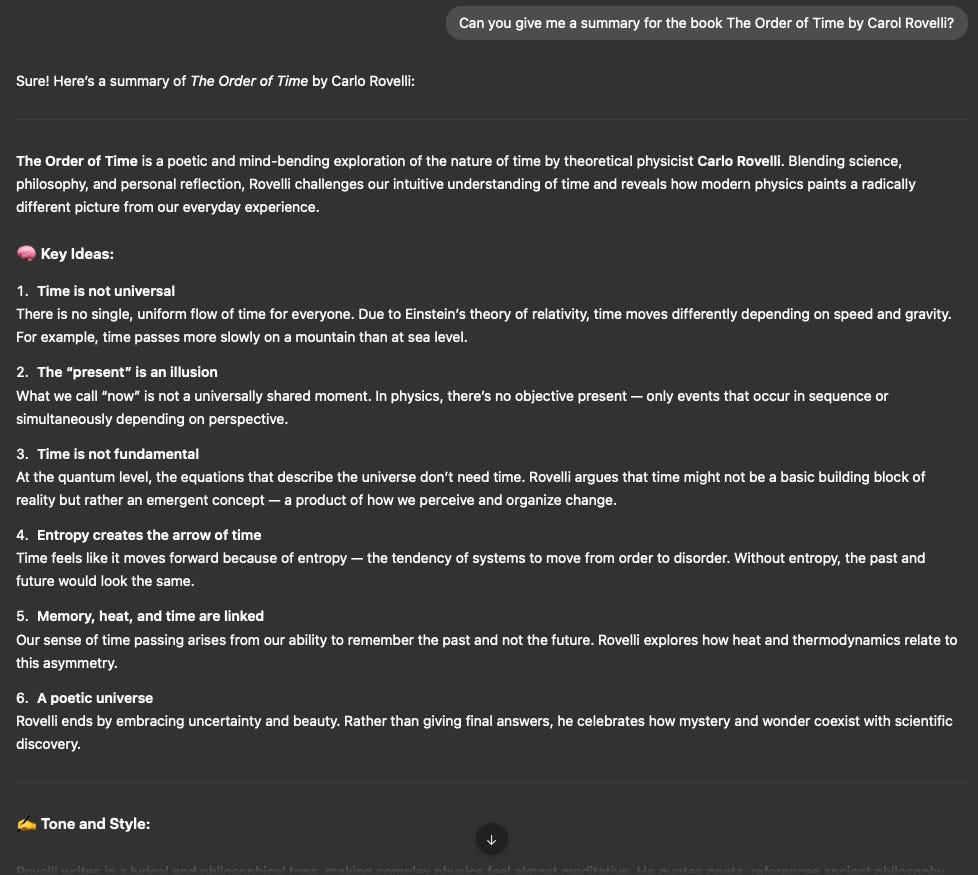

I’ve learned from experience that if we use ChatGPT for everything, we risk losing our edge as thinkers. For example, I’ve just finished The Order of Time, a deep, thought-provoking book about the concept of time. Each sentence challenges my beliefs and makes me think.

Sure, I could have asked ChatGPT to summarize the book for me to save time, but that would’ve been a mistake. Actually, let’s ask for the summary.

Yes, it does a good job of summarizing the book. But it didn’t trigger the same “aha!” moments and deep reflections as when I read the book. The friction of wrestling with each idea is what spurs new insights. ChatGPT, by design, eliminates friction; it gives you quick answers on a platter. That seems helpful, but it can short-circuit the thinking process that leads to original ideas.

Losing that friction is bad for two reasons:

First, if an AI just spoon-feeds me answers, I lose the opportunity to think and perhaps come up with a creative insight of my own. Original ideas often come from grappling with tough questions, noticing gaps or “friction” in understanding, and then innovating to bridge them. If I skip that process, I’m just consuming predigested ideas.

Second, if we all rely on AI to do our reading, thinking, and writing for us, who will be left to produce the kinds of intriguing books and articles that make people think? Great literature and insightful analysis come from humans who have engaged deeply with the material. If we never exercise those mental muscles because an AI did all the heavy lifting, we’ll lose the ability to create anything truly thought-provoking ourselves.

AI is just a tool, not a magic brain replacement.

I like to say Large Language Models (LLMs) are like knives: you can use a knife to carve a beautiful sculpture or to prepare a meal that feeds the hungry, or you can hurt someone. The tool itself isn’t “good” or “evil”; it depends on the user. ChatGPT is the same. There’s a good way and a bad way to use it:

Bad Uses of ChatGPT:

Using it as a shortcut for everything. For instance, telling it “Write me an investment thesis for Apple” and expecting a brilliant, original stock pick. I guarantee the output will be generic and 100% useless as serious investment advice. It will sound like a mash-up of every common opinion on Apple that’s already out there. Nothing new, no insight.

Another bad use is having it summarize rich, complex content (like that book on time) instead of engaging with the material yourself. You get the gist, but miss the nuance and the mental workout. Over-relying on AI in this way gives a false sense of knowledge and can make your thinking bland.

Good Uses of ChatGPT:

Use it as an assistant to augment your learning and productivity.

For example, I might ask, “Explain in simple terms what components go into an iPhone, and provide some reputable sources I can read.”

That’s a great use. The AI can break down a complex topic for me and even point me to further reading.

Or, say I’m analyzing Apple’s financial statements: I could have ChatGPT quickly scan the latest 10-K and the previous year’s 10-K to highlight what changed significantly (but always double-check; ChatGPT hallucinates from time to time). That’s a tedious task for a human, but easy for an AI, and it frees me to think about the implications of those changes.

Another good use is for brainstorming. Sometimes my article titles are too long-winded, and I struggle to simplify them. I’ll ask ChatGPT for 5 alternative title ideas. Honestly, most of its suggestions will suck as they often sound corny or clichéd. But even the bad ideas can spark a thought in my own mind. In fact, I often end up combining two of its mediocre suggestions into a title that does work. In this way, the AI helps me get unstuck creatively.

How do you use AI?

The key is that I’m still in the driver’s seat: I use ChatGPT to handle grunt work and to jog my thinking, not to think or create final output for me. This approach keeps my mind engaged rather than atrophied. And interestingly, many other writers seem to be figuring out this balance too.

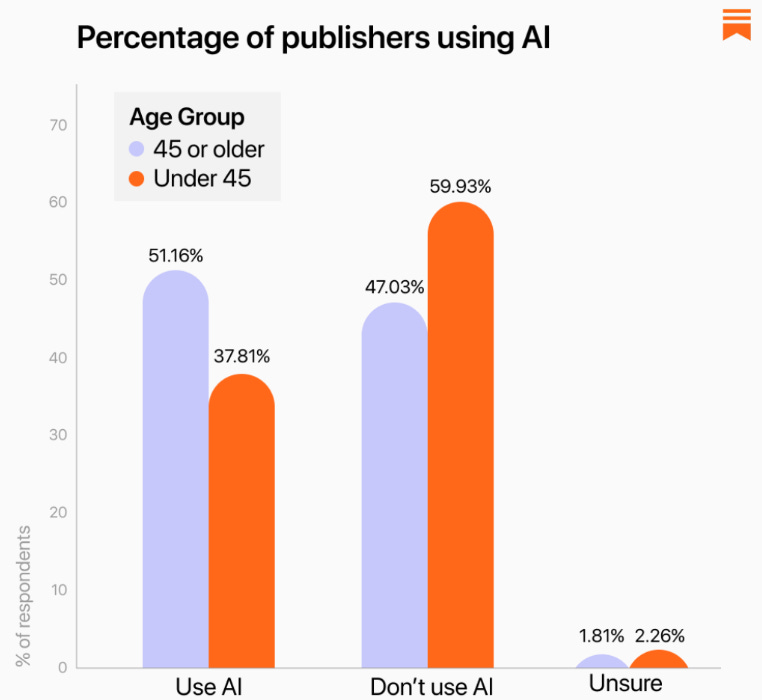

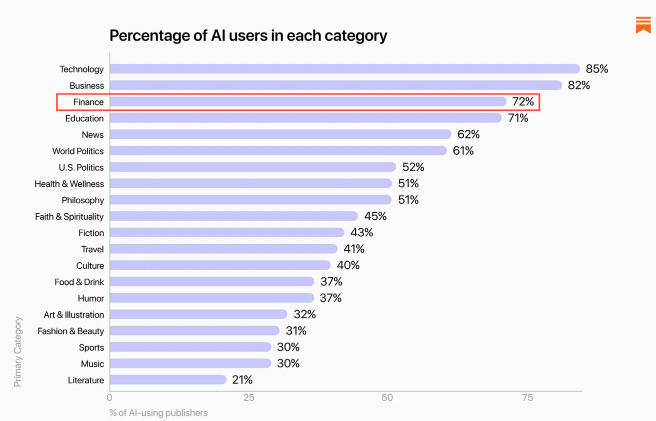

Substack (the newsletter platform I write on) recently surveyed over 2,000 writers about AI. The results showed a pretty sharp split: 45% of Substack writers said they aren’t using generative AI at all, while about 52% are using it in some form. The survey also found some patterns. Older writers (over 45) were more likely to use AI than younger ones (51% vs 38%).

In general, writers in fields like business, tech, and finance use AI far more than those in music, literature, or art.

It’s fascinating: those of us in analytical domains are eager to have AI help with research or data, while many artists and literary folks shun it, maybe fearing it could water down human creativity.

The Substack report noted that the conversation is often about AI content generation and its impact on art and authenticity. A lot of creative writers feel protective of the human element in their work. And I sympathize: I don’t want a robot to write my thoughts of the week for me either!

On the flip side, some writers (even in that survey) pointed out that tools like ChatGPT can be empowering if used right. For instance, helping people with dyslexia or ADHD to organize their thoughts, or enabling blind writers to “see” via descriptive AI tools.

So, it really comes down to how we use it. Use AI to enhance your work and overcome hurdles, yes. But don’t use it as a replacement for doing the thinking, or you’ll never produce anything that isn’t cookie-cutter.

LLMs Are Powerful, But They Can’t See the Future (Yet)

Why do I insist that asking ChatGPT for an “investment thesis” or a stock pick is a bad idea?

Because LLMs aren’t oracles; they’re pattern recognizers. These AI models are trained on past data (basically, everything humans have written up until their cutoff date), and they’re designed to predict likely next words based on that training.

That means they do great with topics that are well-covered in the training data. If you ask ChatGPT to explain how an iPhone is assembled or to summarize Apple’s business model, it will do a decent job, because those facts and patterns exist in the data.

In technical terms, an LLM is performing a kind of interpolation. It’s stitching together patterns it has seen before in a coherent way. But what happens when you ask it something genuinely new, like “Will Apple’s next product be successful?” or “What’s a good new business strategy for Apple?” Now you’re asking it to extrapolate beyond the data… to venture into the unknown future.

ChatGPT can remix what it’s seen, but it can’t truly invent or predict beyond that. It has no actual understanding of Apple’s strategy or the geopolitical risks in Taiwan or anything that isn’t already in its training text. It can’t form a view on a future event that hasn’t been extensively written about.
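To make the "pattern recognizer" idea concrete, here is a deliberately tiny sketch of next-word prediction using word-pair counts (a bigram model). It is a toy of my own construction with a made-up three-sentence corpus, and it is nowhere near how a real LLM is built. It only illustrates the narrow point that a model trained on past text can hand back only what appeared in that text.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus standing in for "everything written before the cutoff".
corpus = (
    "apple is a strong company . "
    "apple is a loyal brand . "
    "apple is a strong brand ."
).split()

# Count which word follows which (a bigram model).
following = defaultdict(Counter)
for current, nxt in zip(corpus, corpus[1:]):
    following[current][nxt] += 1

def predict_next(word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = following.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]

print(predict_next("a"))       # the most common follower seen in training
print(predict_next("vision"))  # never seen in training, so no prediction
```

Ask the toy about a word it has seen and it returns the most common continuation. Ask about a word it has never seen and it has nothing to offer.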

This is why expecting ChatGPT to be a genius stock picker is misguided. The weakness comes from not understanding what an LLM is. It’s a bit like expecting a brilliant historical encyclopedia to suddenly start predicting tomorrow’s stock prices.

ChatGPT doesn’t have a crystal ball; it has a vast library of what has been written before. So if you ask it for an investment thesis on Apple, it will regurgitate the consensus of what’s been said about Apple up to the knowledge cutoff date. You’ll get something like, “Apple is a strong company with a loyal customer base, diversified products, services revenue, blah blah,” which is all true but completely obvious.

There will be no bold insight like “Apple’s next product will create a new market” or “Apple is actually going to face a huge downturn in China” unless those points were already common in the sources it read. In investing, making money often requires having a view that’s a bit different from the herd and being right. An AI that only knows the herd’s past thinking is inherently behind the curve.

For more on this, see my piece Unpopular But Profitable: Why Contrarian Investors Outsmart the Herd.

I’ve seen some folks try elaborate prompt engineering to force ChatGPT into “analyst mode” or give it tools to browse recent information, hoping it will spit out golden stock picks. There was even an experiment where someone gave GPT access to recent news and asked it to manage a portfolio over time. The results were mixed at best. In one year-long experiment (with real money), a GPT-4-powered strategy did manage to beat the S&P 500 Index by a few percentage points, but only because it made a few very obvious bets, like going heavy into NVIDIA during the AI boom.

I find this pretty funny. The AI basically loaded up on the one company that makes the chips all the AI models run on. But was that really insightful analysis, or just luck/hindsight? When tried over many trials, sometimes the AI beat the market, often it didn’t, and its performance was inconsistent.

The takeaway isn’t “AI can pick stocks!”; it’s that if you throw darts, you’ll occasionally hit a bullseye. A blind squirrel finds a nut every now and then, as they say. Without true understanding or the ability to forecast new developments, the AI’s “insights” are mostly rehashed data points.

Now, could a future AI, whether Artificial General Intelligence (AGI) or even a superintelligence, actually do what a great human investor does, and do it better?

Possibly, yes. If we eventually build an AI that truly understands the world, has common sense, and can model complex future scenarios, then all bets are off. That would be a game-changer (and not just for investing but for everything!).

But current LLMs like ChatGPT are not there. They don’t truly think; they simulate thinking by stitching together what thousands of humans have said. That’s why I feel investing, especially the kind of forward-looking, thesis-driven investing I do, will be one of the last jobs to be fully automated by AI.

In the meantime, what AI can do is take over a lot of the grunt work of investing (data gathering, basic analysis), which is great! I use it for that, and it makes me more efficient.

But when it comes to making the final judgment call:

“Do I believe in this company’s vision? How do I weigh the risk of a Black Swan event? Is now the right time to buy?”

…those are deeply human judgments (for now).

Why Investing (and Judgment Jobs) Are Hard for AI

Investing, at its core, is about dealing with uncertainty and making decisions with incomplete information. It’s not just crunching numbers; it’s understanding human behaviour, geopolitics, technology trends, and more. There’s a reason why in every big investment debate, you’ll find smart people on opposite sides.

Some investors think a stock will go up, while others are equally convinced it will go down. They’re all looking at the same data, but they interpret the future differently. That creative interpretation and risk-taking are why markets exist. If the future were obvious and all information known, there’d be no difference in opinion, and probably no opportunity to outperform anyone else.

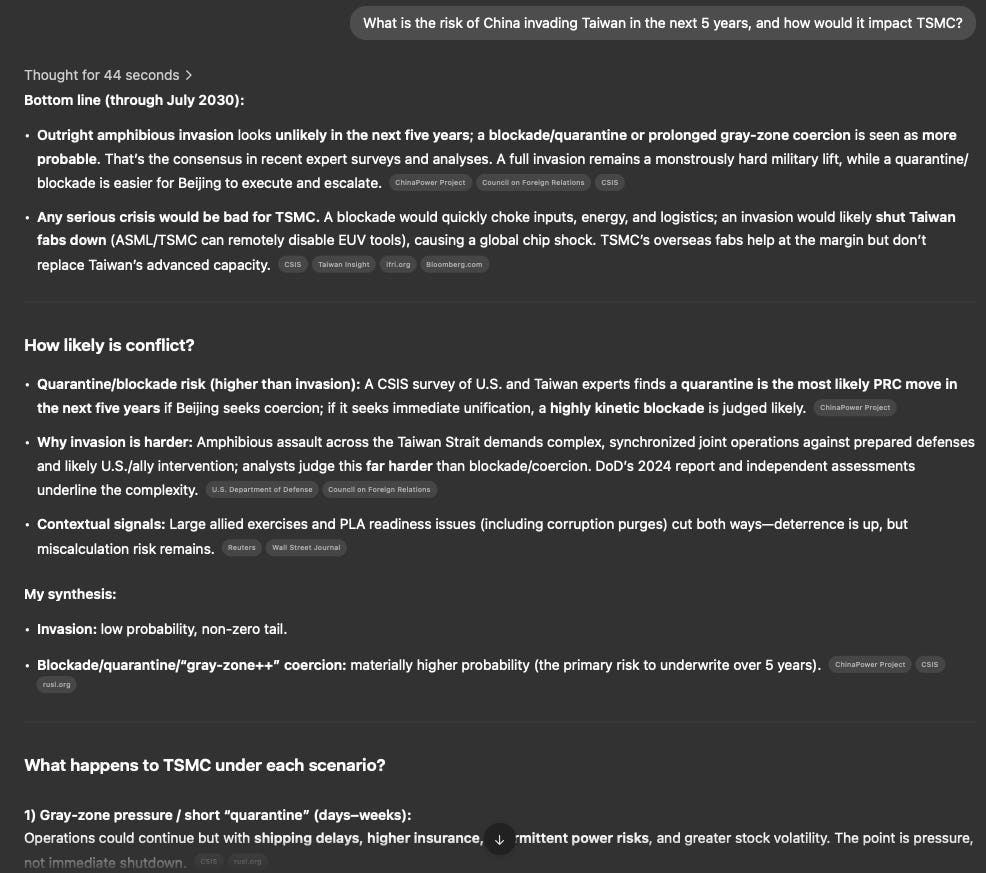

Let me give a concrete example I grappled with recently: the risk of China invading Taiwan, and what that would mean for a semiconductor company like TSMC, as I explained in my TSMC deep dive. This is a scenario that could have enormous implications for tech and the global economy.

But try asking ChatGPT, “What is the risk of China invading Taiwan in the next 5 years, and how would it impact TSMC?” I did. The answer you get will be a long, wishy-washy paragraph that basically goes, “Tensions have been rising, some experts say China might attempt it eventually, others say it would be too costly, it could disrupt the semiconductor supply chain, etc., etc.”

In other words, a summary of known facts and opinions, nothing you couldn’t have cobbled together from reading a few newspaper articles. It won’t give you a bold prediction or a probability, or a stance. It can’t, because there is no consensus in its data on that question (how could there be? It hasn’t happened yet!).

Only a human (or a very advanced AI far beyond today’s) could weigh the intangibles, the political will, the likelihood of U.S. intervention, the internal Chinese dynamics, and come up with a subjective probability like “I think there’s at least a 30% chance in the next 5 years, and if it happens, TSMC’s stock could drop 50% overnight.”

And another human might say, “No, I think it’s a 5% chance at most, not in that timeframe.”

These are judgments, not facts.

ChatGPT doesn’t do judgments.
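To see how much those two judgments diverge in practice, here is a minimal sketch of the arithmetic. It reuses the illustrative 30% and 5% probabilities and the 50% drop from the quotes above. It also assumes, as a simplification of mine, that the stock is flat if no invasion happens, so the only difference between the two investors is the probability they assign.

```python
def expected_hit(p_event, drop_if_event, move_otherwise=0.0):
    """Probability-weighted price move across the two scenarios."""
    return p_event * drop_if_event + (1 - p_event) * move_otherwise

drop = -0.50  # "could drop 50% overnight" (illustrative figure from the text)

for label, p in [("Investor A", 0.30), ("Investor B", 0.05)]:
    print(f"{label}: p={p:.0%}, expected hit = {expected_hit(p, drop):.1%}")
```

Same data, same scenario, yet the first investor is pricing in an expected hit six times larger than the second. That gap comes from judgment, not from the data.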

So if I, as an investor, relied on ChatGPT’s answer, I’d just get the illusion of an analysis (“the AI said lots of words so it sounds like a thorough consideration”), but in reality, I’d have added zero new insight to my decision. Worse, I might lull myself into a false certainty because the AI sounded confident in its very general answer.

This is where humans still have an edge.

We can say, “Alright, consensus (and ChatGPT) can’t make up its mind on China/Taiwan. Let me analyze military readiness, political speeches, historical precedents, etc., and form my own view.” And maybe I’ll conclude something non-consensus like, “Actually, I think the risk is higher than people realize.” If I’m right (and positioned accordingly), that’s how I add value as an investor. If I’m wrong, well, that’s the risk I take, but that’s the game. ChatGPT, on the other hand, will never stick its neck out one way or the other. It has no neck to stick out! By design, it’s a probabilistic parroting machine that leans toward the safe, averaged answer. It’s biased to sound reasonable and centred. Great for avoiding crazy advice; terrible for finding an edge.

Another point: Investing is not purely intellectual; it’s also psychological.

The best investors often have qualities like discipline, intuition, contrarianism, and even emotional resilience (to not panic during downturns). Can an AI be taught those? Perhaps, but today’s systems certainly don’t have an “intuition” or the kind of gut feel a seasoned human develops. And they don’t (yet) experience emotion, so they can’t analogize the feeling of, say, market euphoria or panic, in the way a human who’s lived through crashes and bubbles can.

Some jobs that require understanding human nature, like managing a team, counselling someone, or yes, making certain investment calls, might hang on with humans longer because they involve that human-to-human insight and trust. If I’m running a fund, I suspect my investors (people who give me money to manage) want to know I’m making the call, not a black-box AI that might do something unpredictable. At least for now.

AI Is Coming for Some Jobs Sooner Than You Think

I don’t think AI will replace investors anytime soon, but it is already eating into other jobs. Look at driving. Robotaxis run without a human in the seat in places like Phoenix and San Francisco. Cruise, Waymo, and Zoox are proving the concept. Some prototypes do not even have a steering wheel.

Trucking may flip even faster. Highways are simpler than city streets. The economics are obvious. A truck that runs almost around the clock without mandated rest changes cost structures. We are already seeing fully driverless freight runs on fixed routes in Texas. Others will follow as safety data piles up.

Give this a decade or two, and human driving could become rare on major roads. Once autonomous vehicles beat human safety records by a wide margin, pressure will build to make them the default. It will start with freight and taxis, then spill into consumer cars.

It is not just vehicles. Customer support, basic coding, simple content, and routine legal drafting are being shifted to AI because they are repetitive, well-structured, and trained on vast amounts of past data. Creative work resists more.

Even the Substack survey hints at this split. Arts and literature writers hesitate, while business and tech writers use AI as a helper for research and grunt work.

And finance is not immune. In my OPFI deep dive, the company’s credit model, Model 6, now auto-approves about 80% of applications without a human in the loop, and you can see the payoff in the financials with faster underwriting, lower unit costs, and solid credit performance.

The point is simple. AI will take over many tasks, but not all at once and not evenly. It will hit routine, high-volume jobs first. Judgment-heavy work with human nuance will take longer. That is exactly why I think investing stands near the back of the line.

Hype vs. Reality: Patience, Humans…

I have to talk about the hype cycle. The AI boom today sometimes reminds me of the dot-com boom. In the late 90s, everyone thought the internet would revolutionize everything immediately. People were quitting jobs to join any startup with “.com” in the name.

A lot of money was made and lost on those assumptions. The core idea (the internet changes the world) was absolutely right, but it took time for the infrastructure and business models to mature.

I see a parallel with AI.

I do believe AI will change everything … in time. But we’re probably overestimating how quickly and seamlessly it will happen. There are technical hurdles, regulatory hurdles, and social acceptance issues that will cause friction.

For example, technically, we have a demo of driverless trucks, but scaling that to every highway and every weather condition is an enormous task. Regulators might slow down approvals if accidents happen. Society might push back (as we saw in San Francisco, where residents at one point were placing cones on robotaxi hoods to disable them as a protest).

With AI content generation, we’ll grapple with issues of authenticity, misinformation, and copyright; already, big debates are raging on those fronts. So while I’m integrating AI into my workflow and staying abreast of it for investments, I also remind myself that big technological shifts often take longer to fully play out than the initial burst of enthusiasm suggests.

In that gap between expectation and reality lies opportunity and risk.

If everyone assumes AI will solve everything by next year, they might invest blindly or make poor business decisions. I try to be more nuanced: which AI advances are real and here today, which ones are likely but still a decade out?

For instance,

Replacing truck drivers: I’d bet on that for the 2030s.

Replacing portfolio managers: probably further out, unless we’re talking about purely quantitative funds (those are already basically AI-driven to an extent).

Replacing novelists or artists: maybe never entirely, because human-created art has its own appeal (and humans enjoy art because it connects to human experience).

That Substack survey I cited earlier illustrates this divide in sentiment. It showed that the creator community is deeply divided on AI: roughly half embracing it, half rejecting it, with the conversation colored by both excitement about new possibilities and fear for the future of creativity.

One could read that and say, “See, lots of writers aren’t using it, AI won’t take their jobs.” But remember, in 1999 some journalists said, “I’ll never use the internet for my work.” Fast forward and, well, here we are.

So I suspect many who resist now may eventually adopt AI in some form, or see their industry changed around them. At the same time, those who think AI can do everything already will find out its limits (sometimes in embarrassing ways, like publications that tried AI-written articles, which turned out full of errors).

For investing in particular, I think the hype will meet reality in an interesting way: AI will become a ubiquitous assistant in finance, parsing filings, doing risk analysis, maybe even executing algorithmic trades. But the strategy and higher-level decision-making will remain human for quite a while. The market is ultimately a human system driven by human emotions, unpredictable events and stories. This reminds me of my piece on storytelling. Read Storytelling is a double-edged sword, so be careful.

Until AI can understand and predict those better than us (which might require some form of general intelligence), it will be an aid, not a replacement, for an investor’s judgment.

Conclusion: Partner with AI, Don’t Surrender to It

I’m genuinely excited about AI, and I use it weekly. But I use it deliberately and skeptically. It’s a bit like having a very smart intern who can dig up info and draft things, but who also has no real-world experience or intuition. You wouldn’t let the intern run your whole portfolio, but you’d certainly leverage their help. That’s how I view ChatGPT and other LLMs.

I titled this piece “Why I think investing will be one of the final jobs taken over by AI,” and I stand by that. Investing is an arena that rewards original thought, contrarian insight, and understanding subtle human factors, things that current AI struggles with. Many jobs that are more routine or data-driven are already being eaten by AI (drivers today, perhaps radiologists tomorrow, etc.), whereas the “judgment” jobs will fall later.

That said, eventually is a long time. I’m not saying investors will have jobs forever. If I project far enough out (decades, maybe), who knows, maybe an AI will crack the code of the market (or the market itself will change form due to AI). But by then, society will have had to navigate a lot of other AI disruptions, and we’ll be in a very different world.

For now, my message is: embrace AI as a tool, use it to sharpen your work, not to do your thinking for you. Keep doing the hard thinking, keep nurturing those human insights that AI can’t reach (yet). That’s how we stay relevant and even ahead of the machines. After all, if everyone else gets lazy and lets ChatGPT churn out the same bland analyses, the few humans who still bother to think independently will be the ones coming up with the truly interesting theses and reaping the rewards.

In the end, the edge in investing (and many fields) comes from doing what others aren’t doing. In a world racing toward AI-everything, using AI wisely while preserving your human creativity might just be the ultimate edge.

Portfolio Update

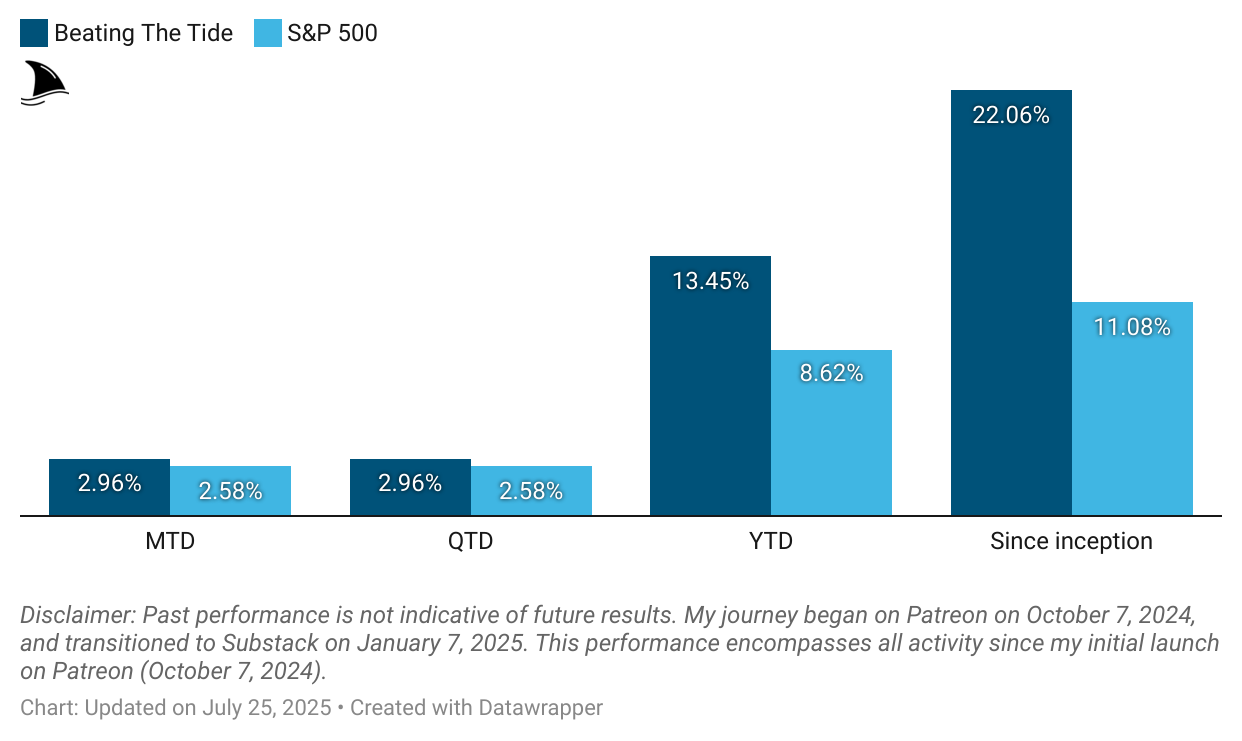

This week, we bounced back and widened the lead vs. the market.

Month-to-date: +3.0% vs. the S&P 500’s +2.6%.

Year-to-date: +13.5% vs. the S&P’s +8.6%, a gap of 483 basis points.

Since inception: +22.1% vs. the S&P 500’s +11.1%. That’s 2x the market.
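For readers who want to reproduce the comparison, here is a minimal sketch of the arithmetic using the rounded percentages above. The function name `excess_bps` is just an illustrative helper. With rounded inputs, the year-to-date gap comes out near 490 bps rather than 483, presumably because the headline returns are rounded to one decimal (that is my assumption, not something stated in the update).

```python
def excess_bps(portfolio_pct, benchmark_pct):
    """Excess return in basis points (1 percentage point = 100 bps)."""
    return (portfolio_pct - benchmark_pct) * 100

# Rounded figures from the update above: (portfolio %, S&P 500 %).
periods = {
    "Month-to-date": (3.0, 2.6),
    "Year-to-date": (13.5, 8.6),
    "Since inception": (22.1, 11.1),
}

for name, (portfolio, benchmark) in periods.items():
    gap = excess_bps(portfolio, benchmark)
    ratio = portfolio / benchmark
    print(f"{name}: {gap:+.0f} bps, {ratio:.2f}x the benchmark")
```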

Portfolio Return

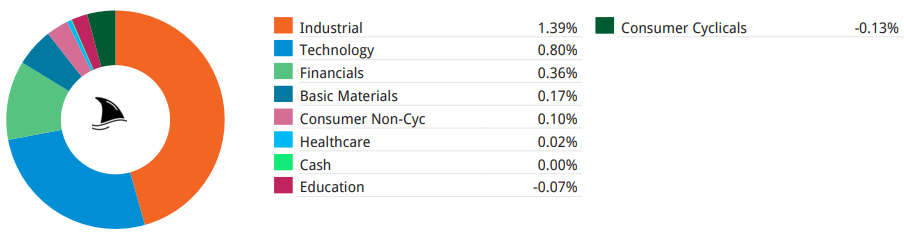

Contribution by Sector

Industrials and tech continued leading the gains, partially offset by consumer cyclicals.

Contribution by Position

(For the full breakdown, see Weekly Stock Performance Tracker)

+51 bps CLS (TSX: CLS)

+46 bps AGX

+30 bps DXPE

+23 bps POWL

+12 bps TSM

+6 bps MFC (TSX: MFC)

-2 bps KINS

-3 bps LRN

-7 bps OPFI
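As a quick way to read the list, here is a minimal sketch that adds up the contributions shown above. It assumes the nine listed positions are the ones that matter for the week. The full tracker may include others, so the net figure is for the listed names only.

```python
# Weekly contribution by position, in basis points, as listed above.
contributions_bps = {
    "CLS": 51, "AGX": 46, "DXPE": 30, "POWL": 23, "TSM": 12,
    "MFC": 6, "KINS": -2, "LRN": -3, "OPFI": -7,
}

total = sum(contributions_bps.values())
gainers = sum(v for v in contributions_bps.values() if v > 0)
detractors = sum(v for v in contributions_bps.values() if v < 0)

print(f"Gainers: {gainers:+d} bps")
print(f"Detractors: {detractors:+d} bps")
print(f"Net of listed positions: {total:+d} bps ({total / 100:.2f} pct points)")
```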

That’s it for this week.

Stay calm. Stay focused. And remember to stay sharp, fellow Sharks!
