My 2025 Stock Pick Returned 134%. Here’s My Top Pick for 2026

The AI memory bottleneck Wall Street is still mispricing and why Micron sits right in the middle of it.

Last year I named Argan (AGX) as my 2025 top pick (here). With 2 days left in 2025, AGX has returned +134% in 2025…

… but +171% since it was added to the portfolio.

That proved a tough act to follow. After vetting a lot of ideas, I narrowed the list to 19 names, of which only 6 survived the second round. In the final round of cuts, just four were left: a European bank, a consumer staples name, a transportation play, and a semiconductor stock.

For a moment I considered issuing a ‘Top 4 Stocks of 2026’, but that would be no fun (don’t worry, the other three will most likely be released as my monthly stock picks). Then I reminded myself that the goal was a single top stock for 2026, and one stood out with the clearest 2026 catalyst: Micron Technology (MU).

Trade alert:

Buy Micron Technology (MU); 2.5% of the portfolio

I’ll be honest. I really wanted my top pick for 2026 not to be AI-related. If you look at my portfolio, most of the biggest winners come from the AI theme.

In a nutshell, Micron is the memory giant at the heart of the AI boom. Demand for its DRAM, HBM, LPDDR, GDDR and flash is exploding thanks to data-center GPUs and edge devices running new AI “agentic” models and inference workloads. I believe Micron’s sales and profits will set records in 2026-27. My DCF suggests a fair price of $480 per share.

TLDR

MU sits in the middle of the AI buildout because AI is a memory problem as much as it is a compute problem. Every layer of the stack needs more memory: HBM in data center accelerators, DDR5 in servers, LPDDR in phones and edge devices, GDDR in certain GPU tiers, and NAND in SSDs.

That matters because the most expensive part of a high-end AI GPU is not the GPU die, it is the memory and packaging around it. Independent cost work suggests HBM plus advanced packaging can make up about two-thirds of the bill of materials in a top-tier NVIDIA GPU. MU’s seat in that supply chain is real. MU is also benefiting from tight supply. Management says it has already locked in price and volume agreements for its entire calendar 2026 HBM supply, and it expects tight conditions to persist beyond 2026.

In fiscal Q1 2026 Micron posted $13.6B in revenue, up 57% y/y, with $10.8B from DRAM and $2.7B from NAND, while gross margin jumped to about 57%. Management called it a quarter of record achievements across total revenue, DRAM, NAND, HBM, and data center. That is why I think MU can outperform in 2026 even after the rally.

My DCF uses a 9.6% WACC and supports a fair value of about $480 per share, while peer multiples still treat MU like a commodity memory name. I think that gap closes as AI inference expands across cloud, edge nodes, and on-device.

Risks still exist. Memory stays cyclical. Competition is real (Samsung, SK hynix, and China’s NAND push). But with Micron’s node progress, AI-driven mix shift, and a clear 2026 catalyst tied to contracted HBM supply and rising DRAM content, this is my top stock pick for 2026.


Company History and Evolution

Micron began very humbly. In 1978 a group of four engineers founded Micron Technology in the basement of a dental office in Boise, Idaho. By the early 1980s they were building tiny DRAM chips; in 1983 they introduced the world’s first 256K DRAM. Through the ’80s and ’90s Micron grew via product innovation and became a Fortune 500 company by 1994; later it also grew through acquisitions (for example, Japan’s Elpida in 2013).

Today MU is one of the largest memory makers globally. It pioneered or raced to lead transitions like 1γ (one-gamma) DRAM nodes and 232-layer NAND. The company’s fabrication plants are mainly in the U.S. (Idaho and Virginia), Japan, Taiwan, and Singapore, with new U.S. fabs now in the works thanks to CHIPS Act funding.

Key executive: Sanjay Mehrotra, now CEO and Chairman, has steered MU through several cycles since becoming CEO in 2017. Under Mehrotra, Micron has refocused on high-value memory segments, partnered on AI chips, and invested heavily in next-gen memory. Micron’s culture is engineering-driven but capital-intensive; margins swing with market cycles (when prices collapse, MU loses money, as happened in 2023).

How Micron Makes Money (Products and Segments)

MU’s business splits into two main product lines: DRAM (volatile memory like DDR) and NAND flash (non-volatile storage). But before we dig into the numbers, let’s really understand the products. People lump “memory” into one bucket. It is not one thing.

DRAM (main memory)

DRAM is the computer’s working desk. It holds the stuff your CPU or GPU needs right now. Open a browser tab. Run a spreadsheet. Load part of an AI model. DRAM holds that active data.

DRAM is fast. It is also temporary. Turn the power off and it forgets everything.

In data centers, DRAM shows up as DDR5 sticks in servers. That is Micron’s bread and butter.

HBM (High Bandwidth Memory)

HBM is DRAM on rocket fuel. It does the same job as DRAM, but it moves data to the GPU much faster. It sits extremely close to the GPU and connects with a very wide “data highway.” That wide highway lets the GPU grab huge amounts of data per second.

HBM matters most for AI accelerators (like NVIDIA’s big data center GPUs) because those chips need to pull mountains of data constantly. When the GPU waits on memory, it wastes money.

HBM is expensive. It is also supply-constrained. That is why it drives so much of the AI hardware cost.

LPDDR (Low Power DRAM)

LPDDR is DRAM designed for batteries. Same idea as DRAM, but optimized to use less power. That is why you find LPDDR in phones, tablets, laptops, and edge devices. It helps keep devices cool and extends battery life.

As on-device AI features grow, LPDDR content per device tends to rise. More AI = more “working desk space” needed on the device.

GDDR (Graphics DRAM)

GDDR is DRAM tuned for graphics and certain accelerators. It sits on graphics cards and some AI inference cards where you want strong bandwidth, but you do not want to pay HBM prices.

Think of it like this:

HBM = fastest highway, most expensive

GDDR = strong highway, cheaper

DDR = solid highway, cheapest in servers

GDDR often shows up in gaming GPUs and “value tier” data center cards that still need lots of memory bandwidth.

Flash (NAND)

Flash is long-term storage. It remembers things even when the power is off.

Flash stores:

your photos

apps

operating system files

databases

AI model weights sitting on SSDs before they get loaded into DRAM

Flash is slower than DRAM, but it is cheaper per gigabyte and it does not forget.

Most flash today is NAND, and it shows up inside SSDs in data centers and PCs, plus phone storage.

This is why Micron matters. It sells into all of these buckets. Different memory types win in different parts of the AI stack.

In fiscal 2025, Micron sold about $28.6 B of DRAM and $8.5 B of NAND, for total revenue of $37.4 B, so DRAM accounts for roughly three-quarters of sales. Micron also reports revenue by end market, across four business units:

Cloud Memory (CMBU): This was $13.52 B (36% of 2025 sales), mainly server DRAM and HBM for data centers. Key products: HBM for GPUs, DDR5/DDR4 DIMMs for servers, LPDDR5 for server/edge, and GDDR6 for AI accelerators.

Core Data Center (CDBU): $7.23 B (19%), mostly SSD controllers and NAND flash for data centers. Products include DRAM-enhanced SSDs and enterprise NAND like the 7400/9500 series.

Mobile and Client (MCBU): $11.86 B (32%), for smartphones, tablets, and PCs. Products: mobile LPDDR DRAM and managed NAND (eMMC/UFS).

Automotive and Embedded (AEBU): $4.75 B (13%), for cars, IoT, and industrial. Products: automotive-grade DRAM (LPDDR, DDR4), embedded flash, and GDDR6 for auto GPUs.

Overall, Micron is effectively paid per gigabyte of memory it ships. Unit economics vary: HBM and server DRAM sell for hundreds of dollars per chip, while mobile DRAM and consumer flash sell for much less. Margins are highest on new-node server products (HBM, DDR5) and lowest on commodity items.

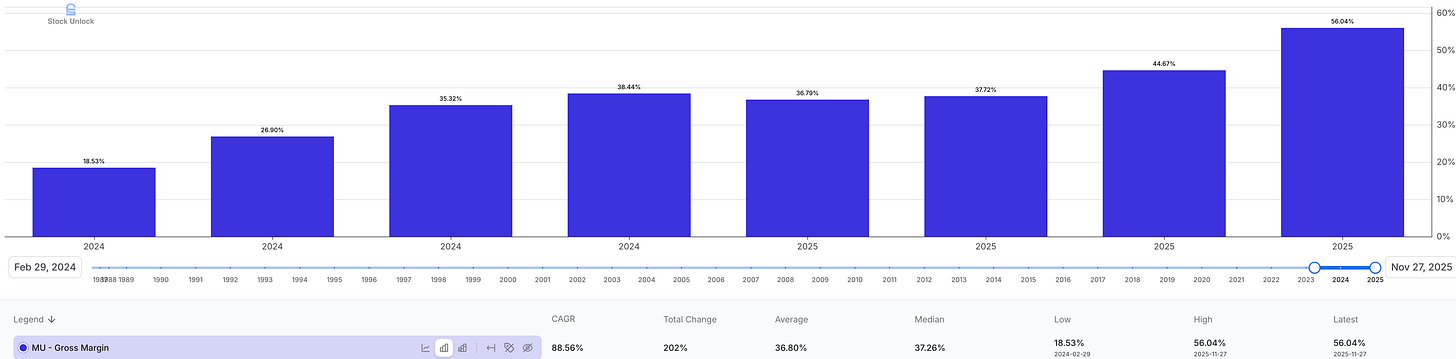

In FY2025, Micron’s gross margin was 40% (recovering from 22% in FY2024). R&D expense was $3.8 B (10% of sales) and SG&A ~$1.2 B (3%), reflecting intense tech spending.

Micron’s customers include top cloud providers (GOOG, AMZN, META, MSFT, OpenAI, etc.) for data-center chips, all major PC and mobile OEMs, and governments and defense contractors for specialized memory. Notably, half its revenue comes from 10 customers, so concentration is high (more on that as a risk later).

AI Inference and Memory Boom

The big catalyst is AI inference. Previously, memory demand was driven by PCs, phones, and servers growing at a gentle secular pace. Now, every generative-AI application, whether in a cloud data center or on-device, is memory-hungry.

A quick detour. A GPU is a chip built to do lots of math in parallel. Think of it as a factory with thousands of tiny workers. Each worker does simple operations fast, and together they chew through the matrix math that AI models rely on. But those workers are useless if they sit around waiting for data. That’s where memory comes in.

If you want a broader look at how different AI accelerators are designed, and why those design choices matter for performance and memory demand, I break it down here.

AI inference is basically “read model weights + read the prompt + do the math + write the result.” The model weights live in memory. The activations live in memory. The GPU has a small amount of ultra-fast SRAM cache on-chip, but it is nowhere near enough to hold modern models. So the GPU leans on external HBM or GDDR to keep feeding those compute units. If bandwidth is too low, the GPU starves. If capacity is too small, the model does not fit. That’s why memory is not a side ingredient. It’s the limiting factor.
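A back-of-envelope sketch makes the “starve or does not fit” point concrete. It assumes a hypothetical 70-billion-parameter model stored at one byte per weight (FP8), a GPU with 8 TB/s of HBM bandwidth and 192 GB of HBM capacity (roughly B200-class figures, used here only as assumptions), and single-stream decoding in which every generated token reads all weights once. Real deployments batch requests and cache attention state, so treat this as intuition, not a benchmark.

```python
# Back-of-envelope for memory-bound LLM inference.
# HYPOTHETICAL inputs: a 70B-parameter model, 1 byte per weight (FP8),
# and a GPU with 8 TB/s of HBM bandwidth and 192 GB of HBM capacity.


def weights_fit(params: float, bytes_per_param: float, capacity_gb: float) -> bool:
    """True if the model weights alone fit in GPU memory (ignores KV cache)."""
    return params * bytes_per_param / 1e9 <= capacity_gb


def max_decode_tokens_per_s(params: float, bytes_per_param: float, bandwidth_tb_s: float) -> float:
    """Upper bound on batch-size-1 decoding speed when every generated
    token has to stream all weights from memory once."""
    return bandwidth_tb_s * 1e12 / (params * bytes_per_param)


model_params = 70e9
print(weights_fit(model_params, 1.0, 192))                      # True: 70 GB of weights
print(weights_fit(model_params, 2.0, 80))                       # False: 140 GB at 16-bit
print(round(max_decode_tokens_per_s(model_params, 1.0, 8.0)))   # 114
print(round(max_decode_tokens_per_s(model_params, 1.0, 4.0)))   # 57
```

Halving bandwidth halves the ceiling, and a model that does not fit cannot run on one GPU at all. Batching many requests improves useful throughput because one weight read is shared across them, which is one reason real systems beat this single-stream bound.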

In practice, the GPU and memory behave like a coupled system. More compute without more memory bandwidth does not help much. That’s also why the newest AI GPUs keep adding more HBM stacks and faster memory interfaces. The “GPU” story is increasingly a “memory” story.
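The “coupled system” idea is what engineers call the roofline model: attainable throughput is the lower of the chip’s peak compute and its memory bandwidth times the workload’s arithmetic intensity (FLOPs performed per byte moved). The peak-compute, bandwidth, and intensity values below are hypothetical round numbers chosen for illustration, not the specs of any particular GPU.

```python
# Roofline sketch: attainable FLOP/s = min(peak compute, bandwidth * intensity).
# All hardware numbers are HYPOTHETICAL round figures for illustration.


def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float, flops_per_byte: float) -> float:
    """Attainable TFLOP/s under the roofline model.
    TB/s multiplied by FLOP/byte gives TFLOP/s directly."""
    return min(peak_tflops, bandwidth_tb_s * flops_per_byte)


# Low-intensity work (e.g. small-batch decoding) moves many bytes per FLOP.
low_intensity = 2.0  # FLOPs per byte, assumed

base = attainable_tflops(1000, 4, low_intensity)
more_compute = attainable_tflops(2000, 4, low_intensity)  # double peak compute
more_memory = attainable_tflops(1000, 8, low_intensity)   # double bandwidth
print(base, more_compute, more_memory)  # 8.0 8.0 16.0

# High-intensity work (e.g. large-batch training) hits the compute ceiling instead.
print(attainable_tflops(1000, 4, 500.0))  # 1000
```

In the memory-bound case, doubling compute changes nothing while doubling bandwidth doubles throughput, which is the point of the paragraph above in two lines of arithmetic.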

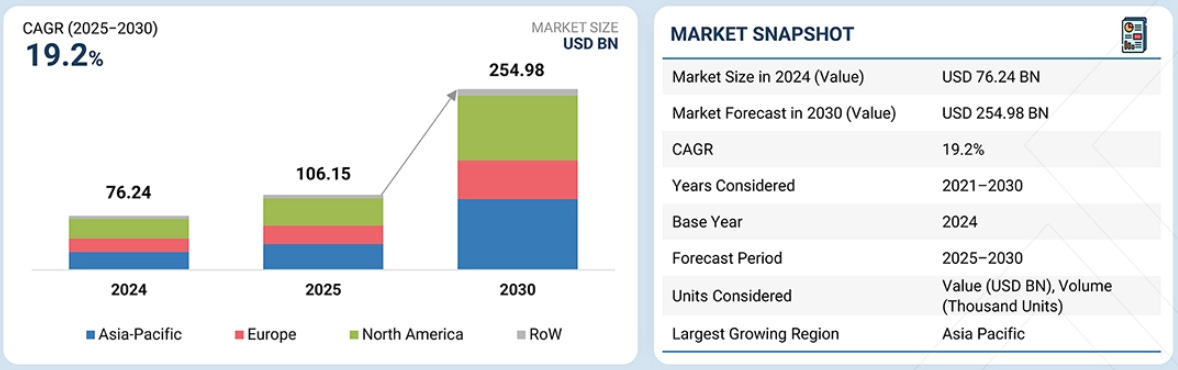

According to McKinsey research, AI inference workloads are rapidly growing and will soon dominate AI compute: by 2030 inference will be >50% of all AI data-center compute (training was the big part before). The AI inference market itself is forecast to jump from about $106 B in 2025 to $255 B by 2030 (CAGR ~19%).

And this spike isn’t limited to hyperscale data centers. AI models are also being deployed on edge and mobile devices (“AI on the phone/camera/car”), which in aggregate drive enormous memory use as well.

For example, edge inference chips still need local DRAM or HBM interfaces, and on-device LLMs require LPDDR. The net effect: virtually every AI chip designer (GPUs, TPUs, networking ASICs) is a potential MU customer, and all of them are constrained by memory supply.

A simple example. I gifted my wife the Ray-Ban Meta glasses for Christmas so she could record more memories with my daughter. In practice, I’ve ended up using them more than she has so far :)

These glasses don’t use exotic AI hardware. They run on a mobile system-on-chip with LPDDR memory and flash storage, similar to what you’d find in a smartphone. No HBM. No giant GPUs.

Yet even in this early, not-that-smart version, they already need local memory to store photos, video, audio, and to run lightweight AI features like voice commands and Meta AI.

And this is just the starting point.

As these devices add real-time translation, vision models, and on-device assistants, memory requirements grow fast. Multiply that by millions of wearables, phones, cars, cameras, and edge devices, and you start to see why memory demand doesn’t just come from data centers. It creeps into everyday hardware.

In NVIDIA GPUs like the new Blackwell B200, memory dominates the bill of materials. Epoch AI broke down the estimated $6,400 cost to produce a B200: 45% ($2,900) is HBM3E DRAM, 17% ($1,100) is advanced CoWoS packaging, and the GPU dies themselves are <15%. In other words, nearly two-thirds of a high-end AI GPU’s cost is HBM, the product category Micron sells, plus the advanced packaging around it. When each hyperscaler orders racks of those GPUs (or custom AI engines), Micron’s revenue and pricing power follow.
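The Epoch AI breakdown is easy to re-derive. This sketch uses only the three dollar figures quoted in the paragraph (the $6,400 total, $2,900 for HBM3E, and $1,100 for CoWoS packaging); no other cost lines are assumed.

```python
# Re-derive the B200 bill-of-materials shares from the quoted dollar figures.
total_cost = 6_400
hbm3e = 2_900
cowos_packaging = 1_100

hbm_share = hbm3e / total_cost
packaging_share = cowos_packaging / total_cost
combined = (hbm3e + cowos_packaging) / total_cost

print(f"HBM3E:    {hbm_share:.1%}")        # HBM3E:    45.3%
print(f"CoWoS:    {packaging_share:.1%}")  # CoWoS:    17.2%
print(f"Combined: {combined:.1%}")         # Combined: 62.5%
```

Memory plus packaging comes to 62.5% of the estimated cost, which is where the “nearly two-thirds” framing comes from.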

Micron has noticed this. On its December 2025 earnings call, the CEO said Micron has now “completed agreements on price and volume for our entire calendar 2026 HBM supply including [HBM4]”. In other words, all of the HBM Micron can make in 2026 is already sold under contract, at higher prices than a year ago.

Management forecasts the total addressable HBM market will grow 40% per year from 2025 ($35 B) to 2028 ($100 B). By 2028, HBM alone could surpass the entire 2024 global DRAM market in dollars!
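Both growth rates in this section can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The inputs are only the figures quoted in the text: an HBM TAM of $35 B (2025) growing to $100 B (2028), and the McKinsey inference-market forecast of $106 B (2025) to $255 B (2030).

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


hbm_tam = cagr(35, 100, 3)         # 2025 -> 2028, $B
inference_mkt = cagr(106, 255, 5)  # 2025 -> 2030, $B

print(f"HBM TAM CAGR: {hbm_tam:.1%}")                 # HBM TAM CAGR: 41.9%
print(f"Inference market CAGR: {inference_mkt:.1%}")  # Inference market CAGR: 19.2%
```

The HBM path works out to roughly 42% a year, consistent with management’s “40% per year” framing, and the inference forecast to about 19%, matching the figure quoted from McKinsey.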

This tidal surge is not limited to HBM; conventional DRAM types have seen strong demand too. CFO Mark Murphy guided fiscal 2026 revenue of $74 B, and management has been explicit that HBM and 1γ DRAM are where most new capacity is going.

Micron’s client Nvidia (NVDA) is the epicenter of AI GPUs, and MU works closely with it. Micron’s HBM3E ships in NVIDIA’s H200 and Blackwell-generation accelerators, and Micron is positioned to supply HBM4 for the next generation (Rubin).

On the server side, Micron’s DDR5 modules and CXL memory expansion modules sit alongside Nvidia GPUs and CPUs. The link is direct: NVIDIA has repeatedly pointed to memory and advanced packaging as supply constraints. In short, as NVIDIA (and AMD, Tesla, Google, and Intel) build out AI infrastructure, Micron is the memory backbone.

Competitive Landscape (Peers: Samsung, SK Hynix, etc.)

MU competes with a few other memory giants. Samsung Electronics has long been the largest (leading in both DRAM and NAND), while SK hynix (South Korea) has challenged it for the DRAM lead on the strength of HBM; Micron ranks third in DRAM. Chinese firms like YMTC (Yangtze Memory) have recently emerged in NAND flash (driven by government support). Micron and Intel also once made flash together through their IM Flash Technologies JV; Micron bought out Intel’s stake in 2019 and later sold the Lehi, Utah fab to Texas Instruments.

In peer valuations, the market still prices Micron on low multiples.

Samsung has affirmed the memory rally too: its memory segment surged, it is striking HBM deals, memory market tightness is acute, and HBM is sold out through 2026. That tightness implicitly benefits Micron as well (and Micron’s own outperformance lifted Samsung’s outlook as customers scramble for supply).

And YMTC is racing to build wafer fabs, with China planning a third YMTC NAND fab by 2027. YMTC claims its 232-layer NAND is competitive with Micron’s and Samsung’s (though YMTC lacks access to cutting-edge lithography tools). So the competitive risk is that Chinese-subsidized players ramp up enough to push prices down the road.

Micron’s defense? Its technology and scale. In DRAM, Micron is at the leading-edge 1γ (1-gamma) node, the sixth generation of 10nm-class DRAM, alongside its Korean rivals’ most advanced nodes. For NAND, Micron’s 232-layer QLC and its newer 276-layer (G9) generation put it ahead of most peers. Micron is committing tens of billions to new fabs (partly funded by U.S. CHIPS Act grants) to build high-volume HBM and DRAM lines in the U.S., something its Korean peers cannot quickly match. This U.S. footprint is a strategic moat: it comes with $6.4 B in CHIPS grants for new fabs (including advanced HBM packaging capability).

As you can see, while Micron is not a monopoly, it’s one of only three players who can supply large volumes of cutting-edge memory. In an industry with brutal cycles and low margins during downswings, this industrial-scale presence is a real advantage.

Competitive Advantages

Advantage #1. Breadth of Product Portfolio

Micron is one of the few suppliers of all types of DRAM and NAND. It can cross-sell at every level: HBM at the top, then server DDR5, then mobile LPDDR, then GPU GDDR, and NAND/SSD at the bottom. That means MU benefits from any spike in memory demand from cloud, consumer, automotive, you name it.

Advantage #2. Technology and node leadership

On DRAM, Micron was among the first to ship 1γ DRAM, which can be priced higher. Its 2024 chiplets move (splitting DRAM dies) improves yields on big dies. On HBM, Micron’s HBM3E and HBM4 lines are shipping with high yields. In NAND, Micron’s 232L QLC and NVMdurance tech give longer endurance.

Advantage #3. U.S. manufacturing and subsidies

Thanks to the CHIPS Act, MU has giant commitments to build new fabs in New York, Idaho, and Virginia. This U.S. base is strategic (reducing China risk) and is effectively subsidized by up to $6.4B in grants and tax credits. (For example, Micron’s new Clay, NY fab will produce HBM locally.)

CHIPS Act support helps, but it is not a blank check. The funding agreements come with rules. For five years after the award date (Dec. 9, 2024), Micron faces limits on special one-time dividends, and it cannot just go wild with buybacks. During the first two years (so, through December 2026), share repurchases are only allowed up to specified amounts, mainly to offset dilution from employee stock compensation (more is allowed only if the Commerce Department permits it). After that, buyback limits can loosen in the final three years if Micron meets certain conditions.

Advantage #4. Customer relationships and sticky contracts

Micron has multi-year agreements with the biggest hyperscalers and auto companies. At the Q1 call, the CEO said the HBM deals for 2026 are all “multi-year contracts…with specific commitments”. Such contracts reduce volatility as customers need guaranteed supply for their server builds.

Management and Insider Behavior

CEO Sanjay Mehrotra and CFO Mark Murphy have steered Micron for years. They are experienced but operate in a cyclical business. Management has warned investors about the cycle risks. For example, Mehrotra has said margins may compress after a high-growth phase. So they know cycles can turn. In recent quarters they’ve chosen to lean in: raising guidance in 2025 Q4 and upping fiscal-2026 capex to $20 B (from $18 B) to add HBM and 1γ capacity.

Insider stock activity is notable. In the past year alone, Dataroma shows zero insider buys and 140 insider sale transactions totaling $134,524,764.

The biggest cluster came from the CFO, who sold 126,000 shares on Oct 30, 2025 for $28M, split across six sales between $221.64 and $226.51 per share. Another large sale came from Scott J. DeBoer, EVP and CTO, who sold 82,000 shares on Oct 27, 2025 at $222.81 for $18M.

The CEO also sold repeatedly in smaller lots across late September through November 2025. Directors sold too, including April S. Arnzen (15,000 shares on Dec 22, 2025 for $4M) and Steven J. Gomo (5,000 shares on Dec 19, 2025 for $1.3M).

I treat this as a yellow flag, not a thesis killer. Insiders often sell for diversification and taxes.

I wouldn’t call Micron management a red flag. Senior executives do not own a meaningful percentage of the company today, but that is normal for a $300-plus billion market cap business. What matters more is the absolute exposure. The CEO still owns 747,000 shares, worth over $224 million at current prices. That is real money. It means management may trim for diversification or taxes, but they are still financially tied to the outcome.

Valuation

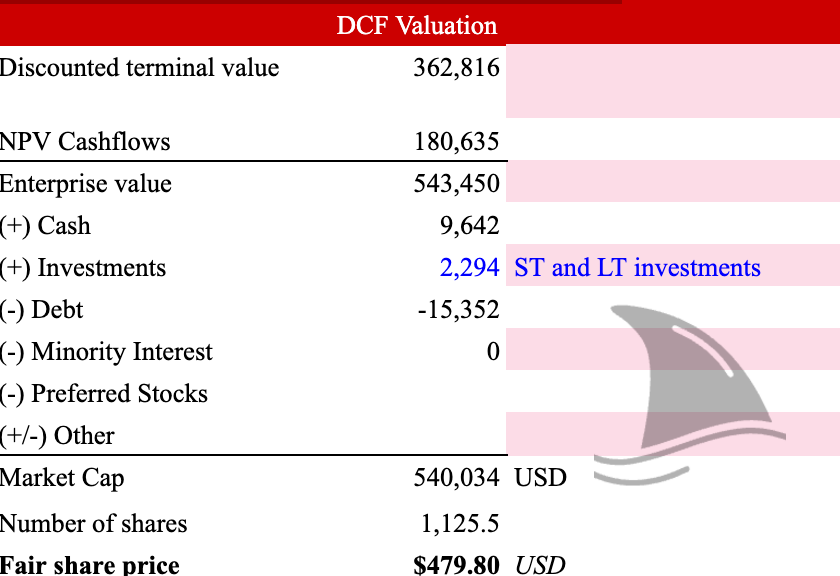

My DCF analysis (with a WACC of 9.6%, tax rate 15%, unlevered beta 1.46, debt ratio 40%) suggests the stock is undervalued at today’s levels.
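For readers who want to see how those inputs combine, here is a standard textbook WACC build: relever the unlevered beta with the Hamada formula, price equity with CAPM, then weight equity and after-tax debt. The article gives the unlevered beta (1.46), tax rate (15%), and debt ratio (40%), which this sketch reads as debt-to-capital; the risk-free rate, equity risk premium, and pre-tax cost of debt are not stated, so the values below are placeholder assumptions and the output will not necessarily match the 9.6% above.

```python
# Textbook WACC build. From the article: unlevered beta 1.46, tax rate 15%,
# debt ratio 40% (read here as debt / (debt + equity)).
# PLACEHOLDER assumptions (not in the article): risk-free rate,
# equity risk premium, pre-tax cost of debt.


def relevered_beta(unlevered_beta: float, tax_rate: float, debt_to_capital: float) -> float:
    """Hamada: beta_L = beta_U * (1 + (1 - t) * D/E)."""
    debt_to_equity = debt_to_capital / (1 - debt_to_capital)
    return unlevered_beta * (1 + (1 - tax_rate) * debt_to_equity)


def wacc(unlevered_beta, tax_rate, debt_to_capital, risk_free, equity_premium, pre_tax_cost_of_debt):
    """Weighted average cost of capital with a CAPM cost of equity."""
    beta_l = relevered_beta(unlevered_beta, tax_rate, debt_to_capital)
    cost_of_equity = risk_free + beta_l * equity_premium
    after_tax_debt = pre_tax_cost_of_debt * (1 - tax_rate)
    return (1 - debt_to_capital) * cost_of_equity + debt_to_capital * after_tax_debt


beta_l = relevered_beta(1.46, 0.15, 0.40)
rate = wacc(1.46, 0.15, 0.40, risk_free=0.042, equity_premium=0.045, pre_tax_cost_of_debt=0.05)
print(f"Relevered beta: {beta_l:.2f}")  # Relevered beta: 2.29
print(f"WACC with placeholder inputs: {rate:.1%}")
```

The relevering step alone lifts beta from 1.46 to about 2.29 at a 40% debt weight, so small changes to the unstated inputs move the discount rate, and therefore the fair value, noticeably.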

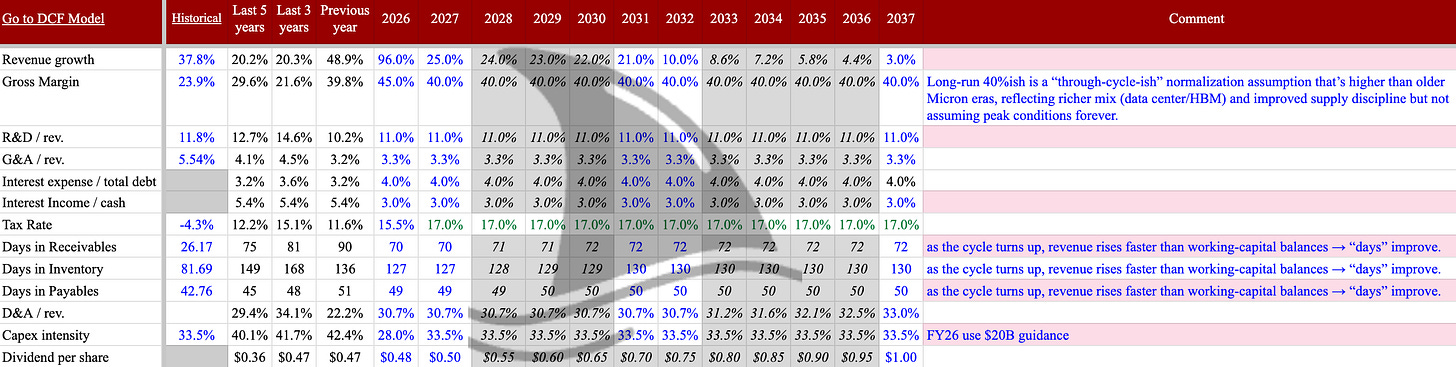

Here are my main assumptions:

Revenue growth: I project very high growth in 2026 (almost a double vs 2025) as AI builds out; then decelerating to mid-20% in 2027-28 as capacity catches up, then slowing further toward long-term industry growth.

Gross margins: I assume a 40% gross margin as a mid-cycle level. This is lower than the 45% margin in Q4 2025 and the 56% margin in Q1 2026.

Stock Unlock: my go-to tool for fundamentals. The free version is great, and if you upgrade, here’s my affiliate link for 10% off.

R&D and SG&A: I assume R&D stays around 11% of sales, SG&A around 3.3%. These are roughly in line with FY2025 (10.2% and 3.2%). In an AI boom, Micron could even invest more in R&D to stay ahead.

Tax rate: I use 15%, reflecting U.S. tax plus some international rates (Micron’s effective 2025 tax was low because of NOLs).

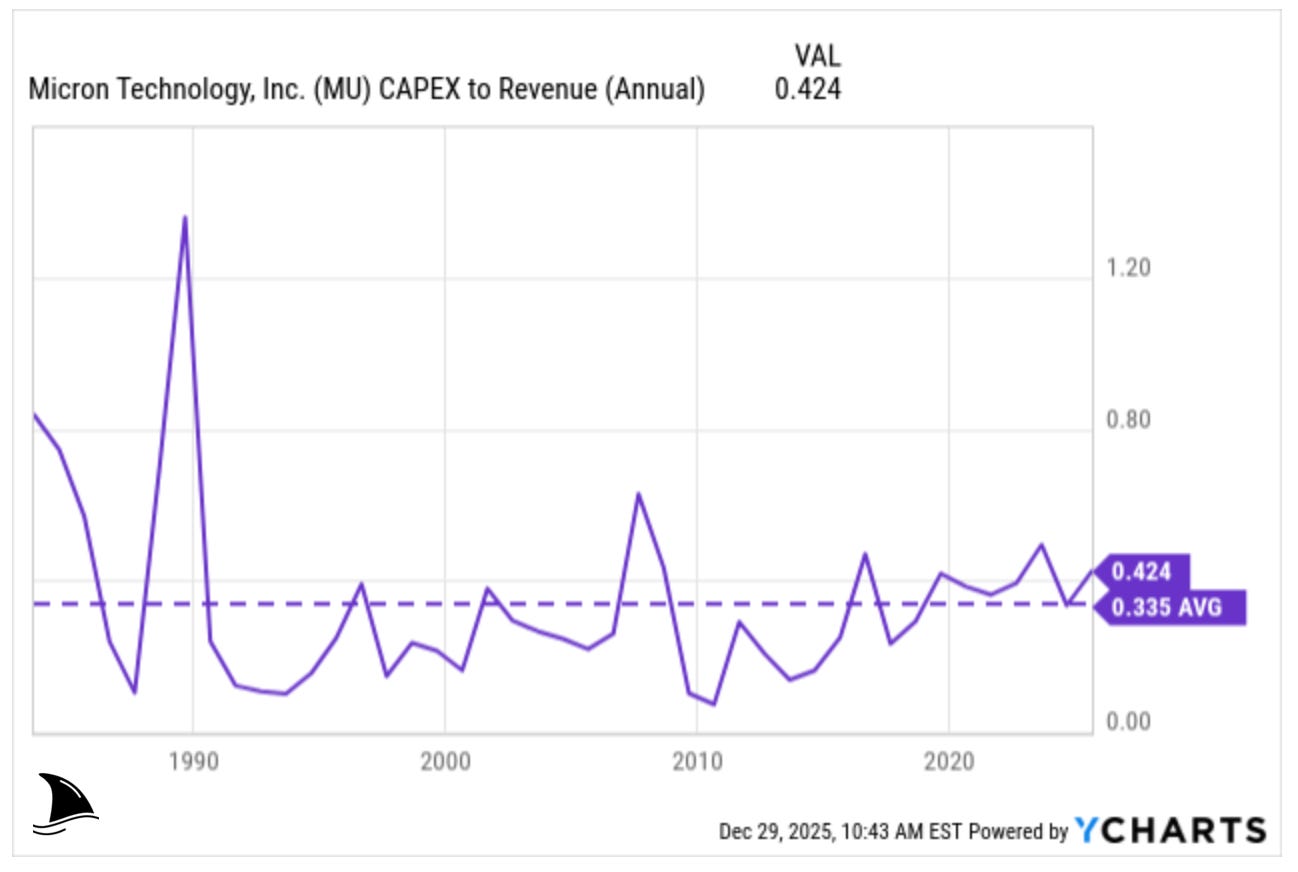

Capex: This is crucial. Micron’s capex is very large because it keeps expanding its fabs. For 2026, management guided $20B, about 27% of FY26 sales, but I assumed a bit more for that year. Long term, I assume capex of 33.5% of sales, consistent with the historical rate.

Working capital: I assume working-capital days improve as revenue scales up, starting from Q1 2026 levels.

Plugging all that, I get an equity value on the order of $480 per share.
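The mechanics behind a number like that are a standard unlevered DCF: free cash flow to the firm each year (after-tax operating profit, plus depreciation, minus capex and the change in working capital), discounted at the WACC, plus a Gordon-growth terminal value. This is a generic template, not the model behind the $480 estimate: the tax rate, WACC, FY26 revenue, and long-term capex rate come from the text, while the later revenue path, EBIT margin, D&A rate, working-capital change, terminal growth, net cash, and share count are hypothetical placeholders, so it does not reproduce $480.

```python
# Generic unlevered DCF template. Tax rate (15%), WACC (9.6%), FY26 revenue
# ($74B) and long-term capex (33.5% of sales) come from the text; everything
# else is a HYPOTHETICAL placeholder.


def fcff(revenue, ebit_margin, tax_rate, dep_pct, capex_pct, delta_nwc):
    """Free cash flow to the firm: NOPAT + D&A - capex - change in NWC."""
    nopat = revenue * ebit_margin * (1 - tax_rate)
    return nopat + revenue * dep_pct - revenue * capex_pct - delta_nwc


def dcf_per_share(cash_flows, wacc, terminal_growth, net_cash, shares):
    """PV of explicit cash flows plus a Gordon-growth terminal value, per share."""
    if wacc <= terminal_growth:
        raise ValueError("WACC must exceed terminal growth")
    pv = sum(cf / (1 + wacc) ** t for t, cf in enumerate(cash_flows, start=1))
    terminal = cash_flows[-1] * (1 + terminal_growth) / (wacc - terminal_growth)
    pv_terminal = terminal / (1 + wacc) ** len(cash_flows)
    return (pv + pv_terminal + net_cash) / shares


revenues = [74, 92, 115, 135, 150]  # $B; years 2-5 are hypothetical
flows = [fcff(r, ebit_margin=0.30, tax_rate=0.15, dep_pct=0.20,
              capex_pct=0.335, delta_nwc=1.0) for r in revenues]
value = dcf_per_share(flows, wacc=0.096, terminal_growth=0.03,
                      net_cash=0.0, shares=1.12)  # shares in billions, hypothetical
print(f"Illustrative value per share: ${value:,.0f}")
```

Because the terminal value usually dominates, the WACC-minus-growth spread is the most sensitive lever, which is why the stress test in the next section randomizes WACC.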

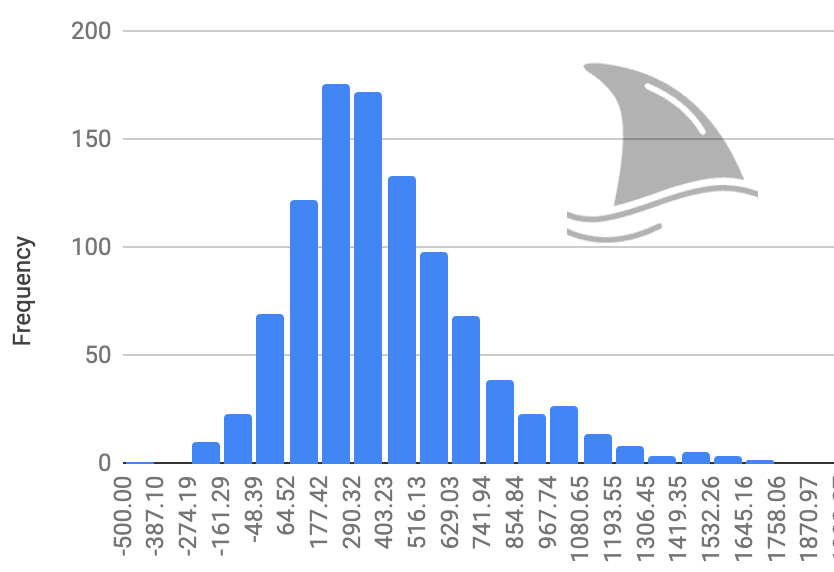

Monte Carlo check: how fragile is the valuation?

After running the DCF, I ran a Monte Carlo simulation to stress-test the result. I did not touch every input. I focused only on the variables that actually matter.

I randomized three assumptions:

Revenue growth from 2027 to 2031: Mean 25%, standard deviation 7%

Gross margin from 2027 onward: Mean 34%, standard deviation 10%

WACC: Mean 9.6%, standard deviation 1.5%

Everything else stayed fixed.

I ran the model one thousand times. Each run produced a different fair value based on those inputs. The chart shows the distribution of outcomes.

About 60% of the outcomes land above today’s stock price.

About 35% land above my $480 target price.

That tells me two things.

First, the thesis does not rely on perfect execution. Even when I let growth slow, margins compress, or capital costs rise, the stock still clears today’s price more often than not. That gives me a margin of safety.

Second, the upside is not guaranteed.

This is how I like risk to look:

The left tail exists, because memory is cyclical.

But the center of gravity sits above the current price (the chart does not make it obvious, but the mean outcome is $407).

The right tail is meaningful if the AI memory cycle lasts longer than the market expects.

In short, the Monte Carlo does not prove Micron is worth $480. It shows that the odds are tilted in my favor, even when I let the key assumptions move around.
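A minimal version of that stress test looks like this. It draws the three inputs from normal distributions with the means and standard deviations listed above and runs 1,000 trials, but the valuation function is a deliberately simple hypothetical stand-in, not the DCF behind the $480 estimate, so only the procedure carries over, not the outputs. The 14% opex roughly matches the R&D and SG&A assumptions above and the $74B base revenue and 15% tax rate come from the text; the reinvestment rate, terminal growth, share count, and $300 reference price are made up. WACC draws are floored just above terminal growth so the terminal value stays finite.

```python
import random
import statistics

# Input distributions from the article: revenue growth (2027-31) N(25%, 7%),
# gross margin (2027+) N(34%, 10%), WACC N(9.6%, 1.5%).
# toy_fair_value is a HYPOTHETICAL stand-in for the real DCF.


def toy_fair_value(growth, gross_margin, wacc, base_revenue=74.0,
                   opex_pct=0.14, tax_rate=0.15, reinvest_pct=0.05,
                   terminal_growth=0.03, shares=1.12, years=5):
    """Toy per-share value: grow revenue, apply a simple FCF margin,
    discount each year, then add a Gordon-growth terminal value."""
    wacc = max(wacc, terminal_growth + 0.01)  # keep the terminal value finite
    fcf_margin = (gross_margin - opex_pct) * (1 - tax_rate) - reinvest_pct
    revenue, pv = base_revenue, 0.0
    for t in range(1, years + 1):
        revenue *= 1 + growth
        pv += revenue * fcf_margin / (1 + wacc) ** t
    terminal = revenue * fcf_margin * (1 + terminal_growth) / (wacc - terminal_growth)
    return (pv + terminal / (1 + wacc) ** years) / shares


def simulate(n_runs=1000, seed=42):
    """Draw the three uncertain inputs and value each scenario."""
    rng = random.Random(seed)  # seeded so results are reproducible
    return [
        toy_fair_value(rng.gauss(0.25, 0.07), rng.gauss(0.34, 0.10), rng.gauss(0.096, 0.015))
        for _ in range(n_runs)
    ]


values = simulate()
price, target = 300.0, 480.0  # hypothetical reference levels for the toy model
print(f"mean value:   {statistics.mean(values):,.0f}")
print(f"above price:  {sum(v > price for v in values) / len(values):.0%}")
print(f"above target: {sum(v > target for v in values) / len(values):.0%}")
```

Swapping `toy_fair_value` for a full DCF function leaves the simulation loop unchanged; the share of draws above a reference price is the same kind of statistic as the 60% and 35% figures above.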

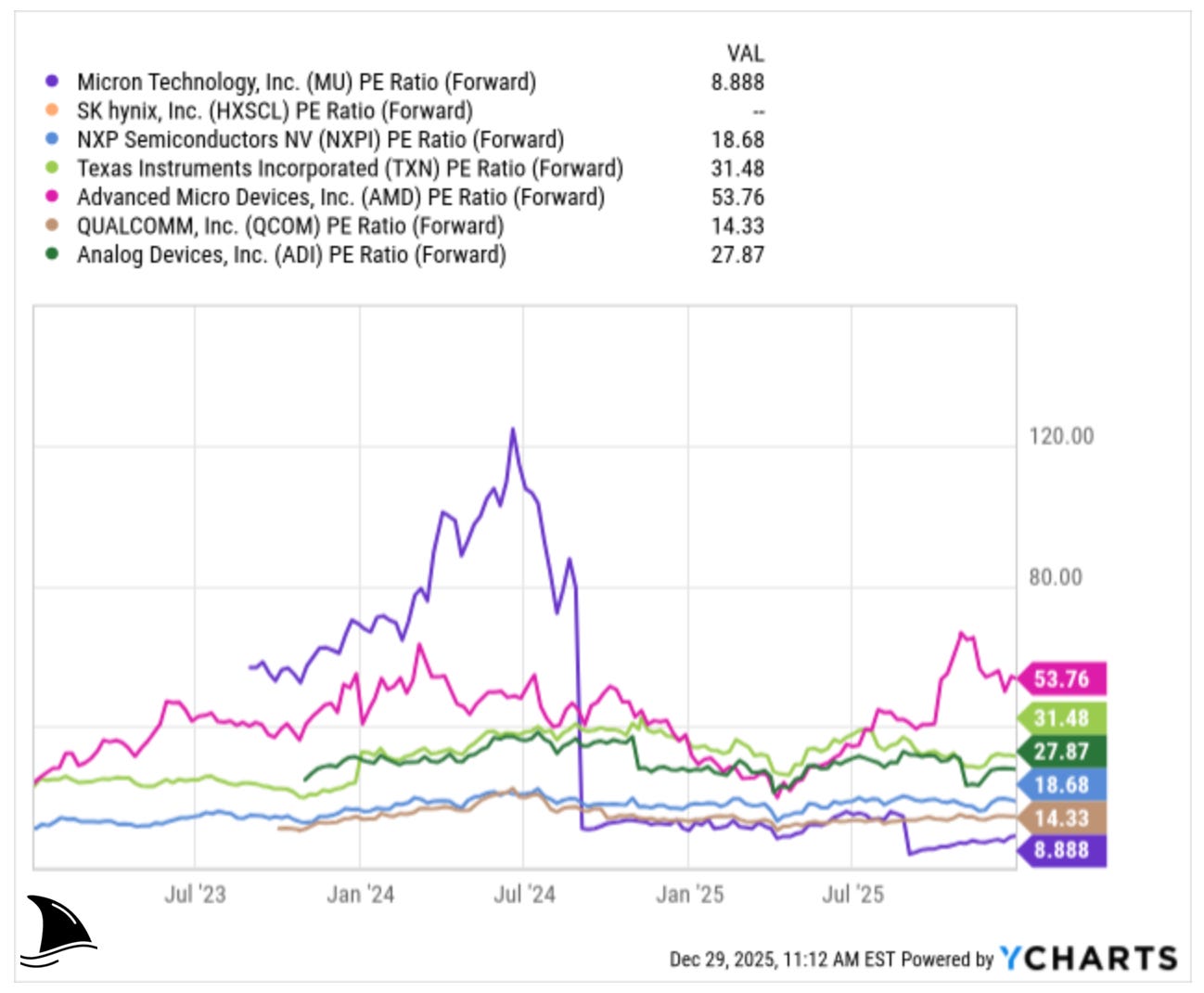

Peer check: MU looks cheap, even before I touch the DCF

I like to sanity check my DCF with a quick peer screen.

Micron is the cheapest stock in the set on the forward numbers.

MU trades at about 8.9x forward P/E. NXPI sits around 18.6x, QCOM 14.3x, TXN 31.5x, ADI 27.9x, and AMD is… in its own galaxy at 53.7x. SK hynix does not show a forward P/E in the snapshot, but that does not change the point. MU sits at the bottom.

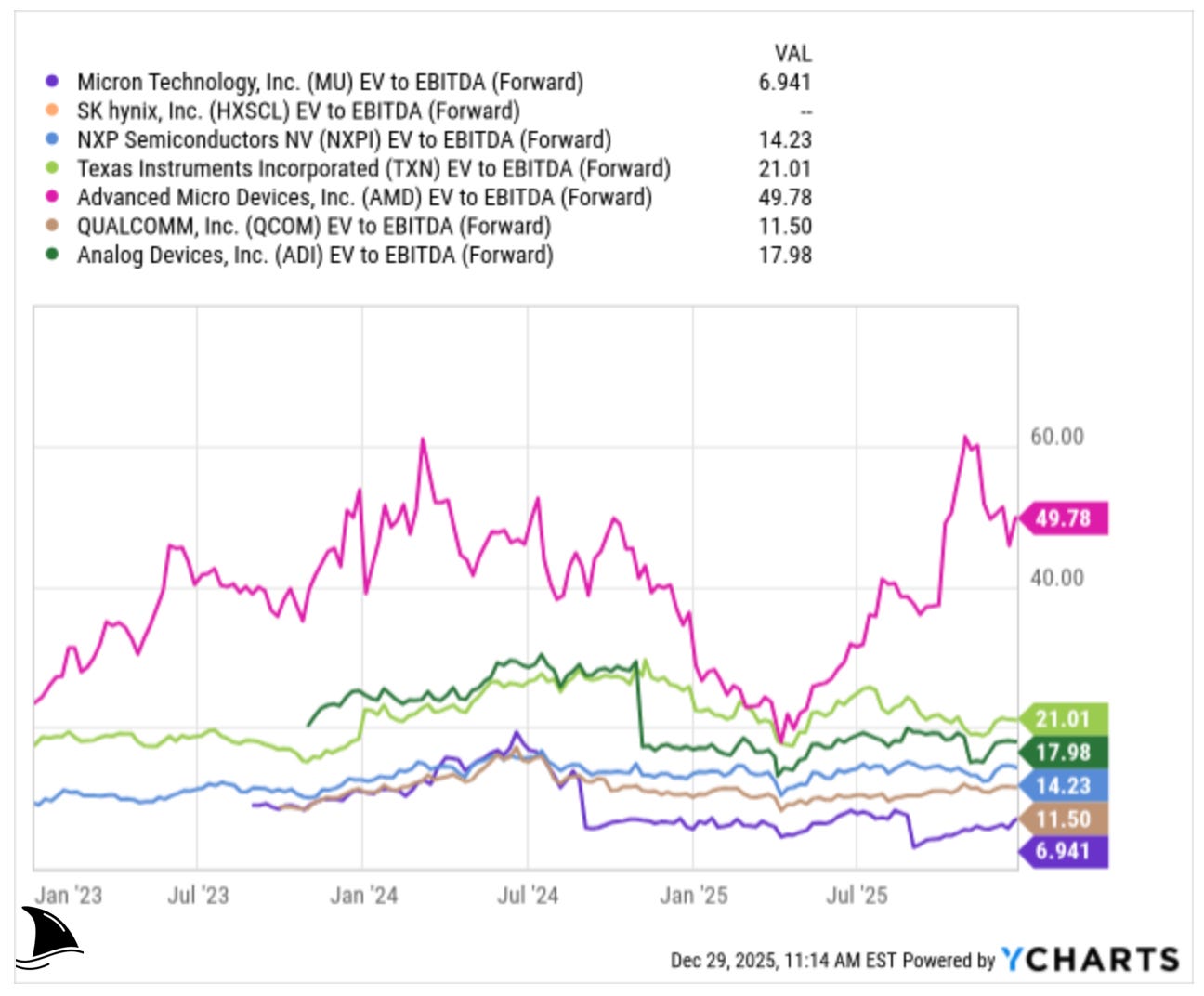

The same happens on forward EV to EBITDA.

MU runs at roughly 6.9x. QCOM shows 11.5x. NXPI sits at 14.2x. TXN at 21.0x. ADI at 18.0x. AMD is 49.8x. Again, MU is the cheapest.
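One way to put a number on “cheapest in the set” is MU’s discount to the peer median. The sketch uses only the forward multiples quoted above (SK hynix is left out because the snapshot shows no forward P/E for it). It uses the median rather than the mean so AMD’s outlier multiples do not inflate the comparison; that choice is an assumption of this sketch.

```python
import statistics

# Forward multiples quoted in the peer snapshot.
forward_pe = {"NXPI": 18.6, "QCOM": 14.3, "TXN": 31.5, "ADI": 27.9, "AMD": 53.7}
forward_ev_ebitda = {"QCOM": 11.5, "NXPI": 14.2, "TXN": 21.0, "ADI": 18.0, "AMD": 49.8}
mu = {"pe": 8.9, "ev_ebitda": 6.9}


def discount_to_median(own: float, peers: dict) -> float:
    """Fractional discount of `own` versus the peer median multiple."""
    return 1 - own / statistics.median(peers.values())


pe_disc = discount_to_median(mu["pe"], forward_pe)
ev_disc = discount_to_median(mu["ev_ebitda"], forward_ev_ebitda)
print(f"P/E median {statistics.median(forward_pe.values())}, MU discount {pe_disc:.0%}")
# P/E median 27.9, MU discount 68%
print(f"EV/EBITDA median {statistics.median(forward_ev_ebitda.values())}, MU discount {ev_disc:.0%}")
# EV/EBITDA median 18.0, MU discount 62%
```

Roughly a two-thirds discount on forward P/E and about 60% on forward EV/EBITDA: MU is not slightly below the middle of the group, it is far below it.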

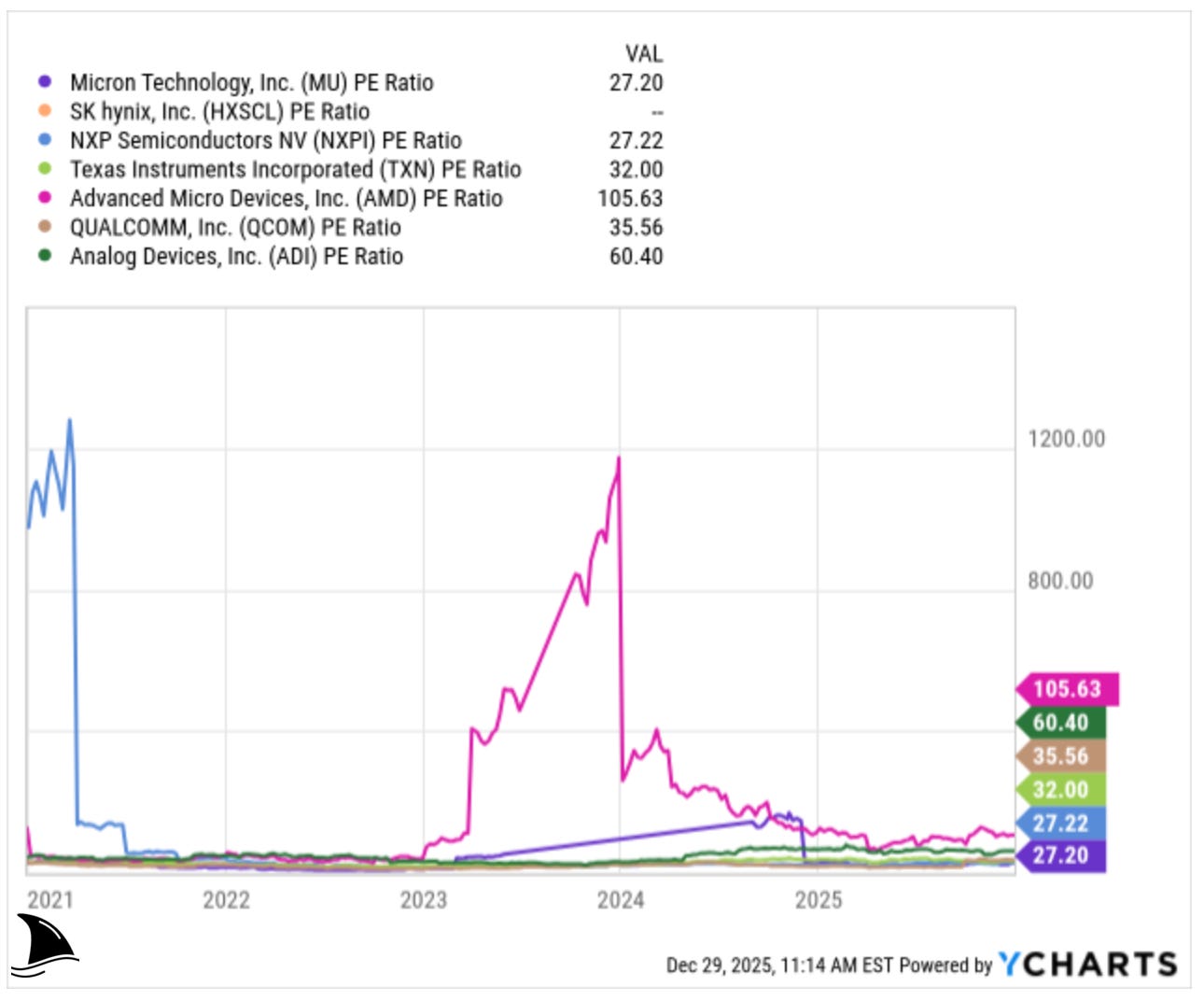

Even on the trailing numbers, MU still looks discounted.

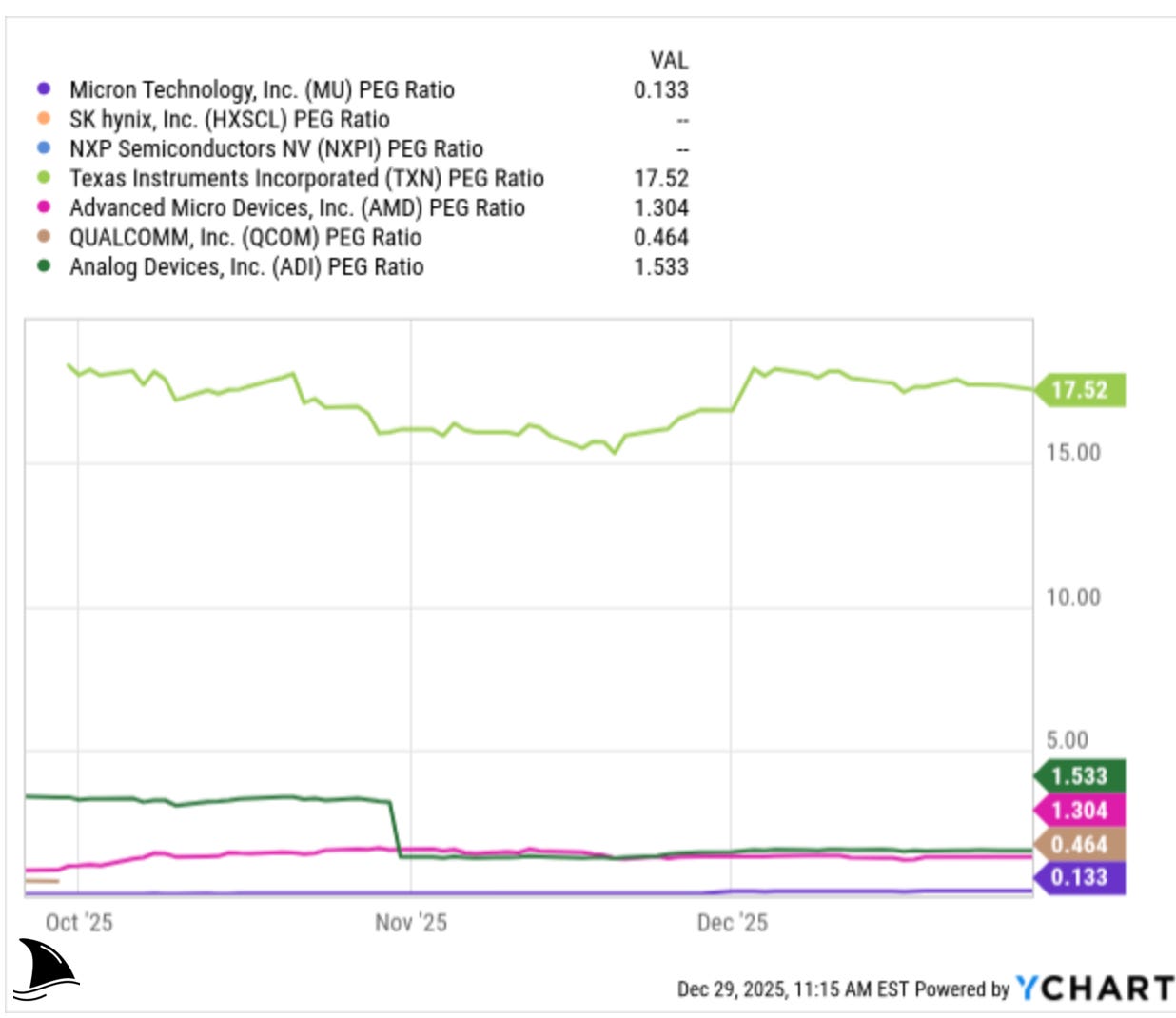

The cleanest way to see the discount is the PEG ratio.

MU shows about 0.13. AMD sits around 1.30. ADI around 1.53. TXN shows 17.5. That gap is massive. It tells you the market still prices MU like a cyclical rebound story, not like a multi-year growth engine. That might be fair in a normal memory cycle. This cycle does not look normal.
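PEG is the P/E divided by expected annual earnings growth in percentage points, so it can be run backwards to see what growth a quoted PEG implies. The snapshot does not say which P/E or growth horizon it uses, so pairing MU’s 8.9x forward P/E with its 0.13 PEG is an assumption made for illustration.

```python
def peg(pe: float, growth_pct: float) -> float:
    """PEG ratio: P/E divided by expected EPS growth in percent."""
    return pe / growth_pct


def implied_growth_pct(pe: float, peg_ratio: float) -> float:
    """Annual growth (in percent) that a given P/E and PEG imply."""
    return pe / peg_ratio


# ASSUMPTION: the screen's 0.13 PEG for MU is based on the 8.9x forward P/E.
print(round(implied_growth_pct(8.9, 0.13)))  # 68
print(peg(20.0, 20.0))                       # 1.0
```

A PEG near 1.0 is the usual rule of thumb for a stock priced in line with its growth; on this assumed basis, 0.13 implies expected earnings growth of roughly 68% a year.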

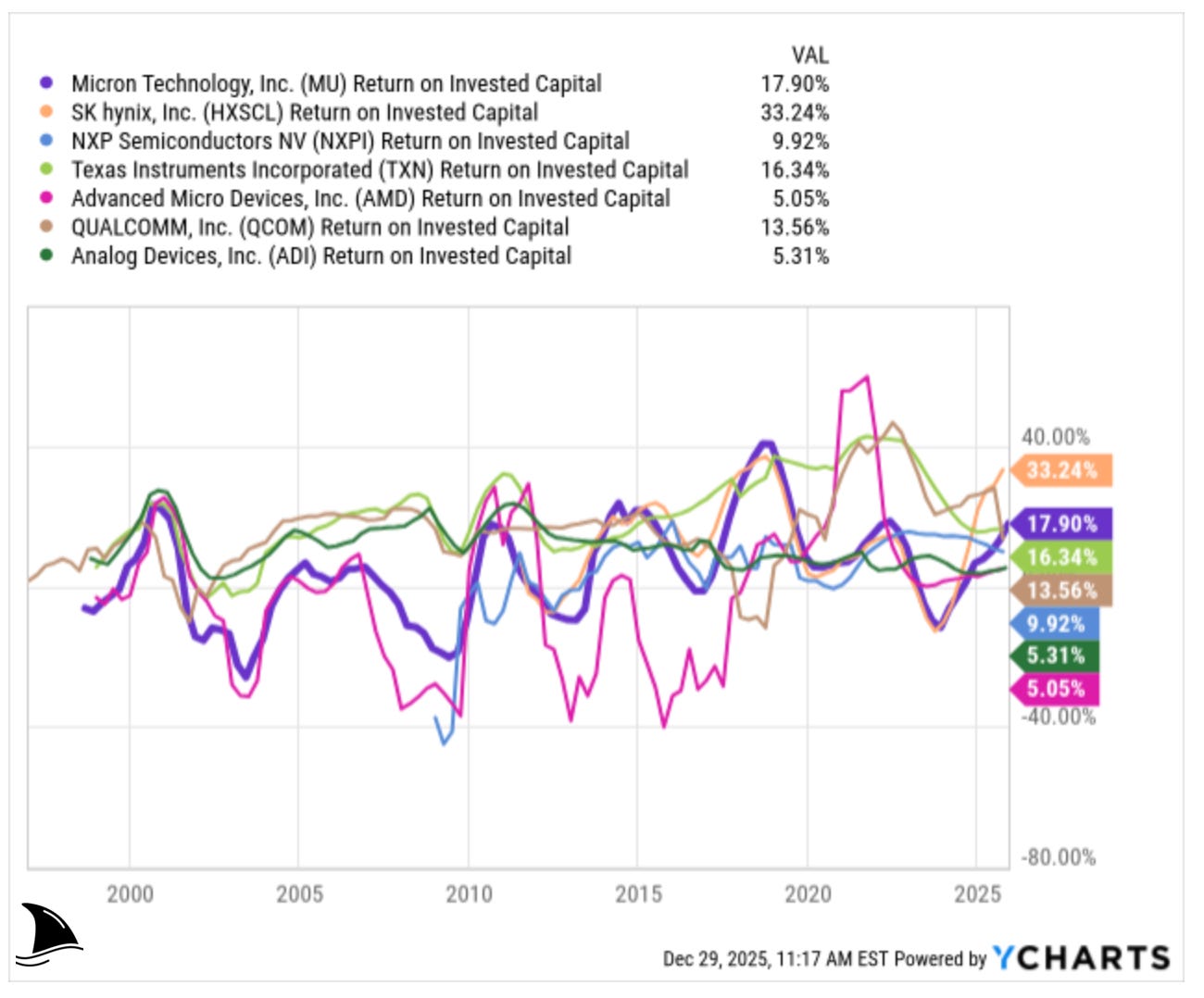

The ROIC chart screams “cyclical industry.” Still, MU now shows about 17.9% ROIC, second highest in the peer group, behind only SK hynix at 33.2%.

I expect Micron’s ROIC to keep climbing in this supercycle.

Why?

Mix and pricing. HBM and data center DRAM pull margins up. Contracts pull volatility down. Utilization improves. That combo pushes returns higher.
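The mix argument maps directly onto the ROIC formula, NOPAT divided by invested capital: if a richer product mix lifts operating margin while the capital base stays roughly the same, ROIC rises in proportion. The revenue, margins, and capital figures below are hypothetical, chosen only to show the mechanism; they are not Micron’s reported numbers (the 15% tax rate is the one used in the DCF above).

```python
def roic(revenue: float, operating_margin: float, tax_rate: float, invested_capital: float) -> float:
    """Return on invested capital = NOPAT / invested capital."""
    return revenue * operating_margin * (1 - tax_rate) / invested_capital


# HYPOTHETICAL: same revenue and capital base, operating margin rising
# from 25% to 35% as the mix shifts toward HBM and data-center DRAM.
base = roic(revenue=50, operating_margin=0.25, tax_rate=0.15, invested_capital=80)
richer_mix = roic(revenue=50, operating_margin=0.35, tax_rate=0.15, invested_capital=80)
print(f"{base:.1%} -> {richer_mix:.1%}")  # 13.3% -> 18.6%
```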

Why is SK hynix higher today?

HBM explains most of it. SK hynix has had a stronger HBM mix and, in practice, it has operated closer to the center of the HBM supply bottleneck. When you sell more of the highest margin product in the tightest part of the market, ROIC jumps fast. That does not mean Micron loses. It means Micron still has room to catch up as its HBM ramps and its mix keeps shifting toward data center.

Risks

Risk #1. Memory Cyclicality

Historically, DRAM/NAND pricing is extremely cyclical. When supply outpaces demand (or if AI hype fizzles), prices can collapse. For example, in 2022-23 DRAM prices plunged and Micron swung to a large loss in fiscal 2023. If AI spending disappoints or if new fab capacity (MU’s or competitors’) comes online too fast, Micron’s sales and margins could reverse sharply.

Risk #2. Competition

Samsung and SK Hynix can always respond with price cuts or faster tech. And I noted Chinese rivals: YMTC (NAND) and others (like Changxin for DRAM) are heavily subsidized to chase Micron. If those catch up with state-of-the-art nodes, it could erode Micron’s moat.

Risk #3. Customer Concentration

Over half of Micron’s revenue comes from just its top 10 customers, mostly hyperscalers and major OEMs.

This is a double-edged sword: it means huge volume agreements but also dependency. If any big customer reduces orders (say a large hyperscaler pauses investment), or if consolidation shifts power in negotiations, Micron could see revenue swings.

Risk #4. Technological Obsolescence

Non-DRAM memory types (e.g. HBM substitutes, or fundamentally new memory tech like MRAM) are always a theoretical threat. If the industry suddenly shifts architectures (e.g. local on-chip memory, or widespread use of very-high-bandwidth alternatives), Micron’s large DRAM/NAND fabs could become underutilized. That’s less likely in the near term, but it is a risk over a longer horizon.

Risk #5. Macro/Governance

Global tech competition and geopolitics can affect Micron. Chinese regulatory action (such as the 2023 cybersecurity review that barred Micron products from critical infrastructure operators) or potential supply restrictions could impact sales. While Micron is building more U.S. capacity, it still had 10% of sales to China customers in 2025; any Chinese regulations or trade tensions could dampen sales. Management has disclosed these as risk factors (CHIPS Act funding also has milestones and clawbacks if requirements aren’t met).

Risk #6. Valuation / Execution Risk

Finally, after the rally, expectations are high. If Micron’s execution falters (delays in ramping new processes, yield problems, or mishandled inventory), the market might lose confidence quickly. The heavy insider selling might already signal some caution (and it could weigh on sentiment if it continues).

Final Verdict

After weighing all factors, Micron is my top stock pick for 2026. It meets all my criteria for a top pick: a strong, simple story (the AI-memory boom), proven execution (record revenue in fiscal 2025 and a record fiscal Q1 2026), and relative value (cheap multiples compared with peers).

Yes, memory stocks can turn on a dime, but right now Micron looks more like a tech growth name than a commodity: a company that should earn far more as we go through 2026. Its quarterly reports and guidance show a coiled spring. Management is plowing cash into expansion, and almost every new data center or 5G edge rollout will need Micron’s chips.

In a market where many tech stocks are richly valued, Micron stands out as a deep value story combined with a potentially transformative cycle. MU fits my style: a big-picture tech leader benefiting from a clear trend (AI), with solid fundamentals and multi-year upside. I wouldn’t bet on it being smooth (memory is volatile), but in my view the risk/reward skews strongly toward a higher price by this time next year.
