The Need for Speed: From Home Wi-Fi to Celestica’s 800G Advantage

How Celestica (CLS) is riding the shift from 400G to 800G and soon 1.6T, why Nvidia–OpenAI–Oracle’s billion-dollar triangle matters for its growth, and my revised target price for CLS shares.

Here we are again, looking at what to do with CLS stock. I refreshed the valuation seven weeks ago, and the stock has already hit—and passed—my target, which means it’s time for another review and a chance to factor in the benefits from the new OpenAI–Nvidia partnership.

Funnily enough, I had just been frustrated with slow internet at home.

My home office has a Mac Mini (where I do all my writing), a PC (for trading), a travel laptop, and a NAS that backs up my data every night.

Even though my internet plan is 500 Mbps, Fast.com kept showing much slower speeds. Meanwhile, my smart TV streamed 4K movies without a hiccup. Then it hit me: the TV was on Ethernet, but all my computers were on Wi-Fi. When I set up the office, I never ran cables because the router is in the living room, and I didn’t want unsightly wires snaking across the floor (neither did my wife).

So last Saturday I finally fixed it. I bought the cables, covers, and an Ethernet switch and hard-wired my devices. Instantly, my speeds jumped, and everything felt snappier.

That little home-networking upgrade got me thinking: if 1 Gbps makes such a difference in my house, imagine the scale needed in an AI data center. In those massive server farms, thousands of AI servers and GPUs have to talk to each other at lightning speeds. This is where 800 Gbps switches come in, and it’s a big reason I remain bullish on Celestica.

Celestica (CLS) isn’t a household tech name unless you have been following Beating The Tide. CLS has become a critical supplier behind the scenes of the AI revolution. The company builds high-speed 800G networking equipment that keeps AI supercomputers fed with data. In the same way my 1G switch relieved a bottleneck in my home network, Celestica’s 800G switches are relieving bottlenecks in the world’s most advanced data centers.

This is by no means a deep dive on CLS; for that, check out my deep dive from April of this year.

In this piece, I’ll explain why these 800G devices are so important, how Celestica is benefiting, and why I’m raising my target price on the stock from $253 to $320 per share.

Home Wi-Fi vs. AI Data Centers: Scaling from 1G to 800G

My home networking saga is a tiny example of a universal truth in tech: as demand grows, networks must scale up. In a data center running AI models, the demand is astronomical. Think of a cluster of AI servers (each with multiple GPUs) all needing to exchange huge volumes of data in real time. A standard 1 Gbps link (like in home internet) is nowhere near enough. Even the 400 Gbps switches that were cutting-edge a couple of years ago are becoming a bottleneck.

Enter 800 Gbps (800G) switches, the next generation of ultra-fast data plumbing for AI. These switches handle double the throughput of 400G models, allowing cloud giants to connect servers and GPU pods at twice the speed.
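To make “double the throughput” concrete, here is a back-of-the-envelope sketch of how long it takes to push a fixed payload through a single link at different speeds. The 1 TB payload is a hypothetical figure chosen for illustration, and the math ignores protocol overhead, so real transfers would be somewhat slower.

```python
def transfer_seconds(payload_bytes: float, link_gbps: float) -> float:
    """Ideal time to move payload_bytes over one link, ignoring protocol overhead."""
    bits = payload_bytes * 8          # bytes -> bits
    return bits / (link_gbps * 1e9)   # Gbps -> bits per second


PAYLOAD_BYTES = 1e12  # hypothetical 1 TB payload (decimal terabyte)

links = [("1G home link", 1), ("400G switch port", 400), ("800G switch port", 800)]
for name, gbps in links:
    print(f"{name:>16}: {transfer_seconds(PAYLOAD_BYTES, gbps):>8,.1f} s")
```

Ignoring overhead, the same terabyte takes about 8,000 seconds (over two hours) on a 1G link, 20 seconds on a 400G port, and 10 seconds on an 800G port. Doubling the port speed halves the time.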

Where is 800G used? Primarily in the data centers of hyperscalers. Whenever you hear about an AI training cluster with thousands of GPUs working together (for example, training large language models like GPT-4), there’s an immense amount of data shuttling between those GPUs.

800G switches provide the highways for that data. They sit in server racks, linking machines together so that no single GPU is starved of information. In effect, 800G networking gear is mission-critical for AI infrastructure. Without it, those multi-billion-dollar AI superclusters can’t reach their full potential.

Celestica recognized this need early and positioned itself as a key supplier of these high-speed switches. The company had already been building 400G switches for major cloud customers, and as those customers began shifting to 800G, Celestica was ready to supply the new gear.

In the words of Celestica’s CEO, “…every 400G customer we had has turned into an 800G customer…”. He noted on the Q2 2025 earnings call that Celestica’s market share is actually larger in 800G than it was in 400G, thanks to early wins with big clients.

In practice, this means all of Celestica’s top hyperscaler customers have now started ramping their 800G programs. One of these top-three customers sharply increased 800G orders in Q2, and the other two are catching up in the second half of 2025. The 800G transition is well underway, and Celestica is at the heart of it.

Why does this matter so much for AI?

Because as AI models get more complex, the network can be the limiting factor. You can pack more GPUs into a cluster, but if the connections between them are slow, training times won’t improve. By doubling network speeds from 400G to 800G, cloud providers ensure that their expensive AI hardware is fully utilized.

In short, 800G is the enabler for scaling AI workloads. And Celestica enjoys a strong “follow the money” tailwind: whenever a hyperscaler budgets billions for AI, a slice of that is going into networking hardware that Celestica builds. It’s a classic picks-and-shovels play in the AI gold rush.

Celestica’s 800G Windfall and the 1.6T Horizon

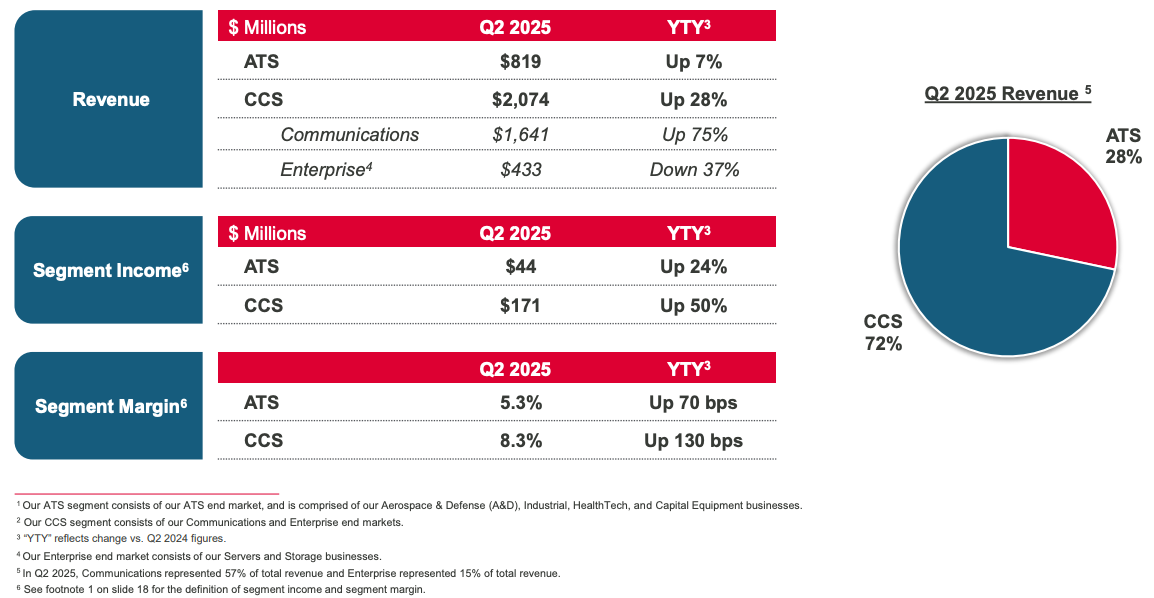

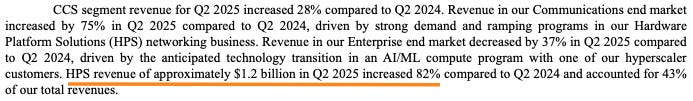

The impact of the 800G ramp on Celestica’s numbers has been dramatic. In the second quarter of 2025, Celestica’s CCS (Connectivity & Cloud Solutions) division, which houses the data center networking business, grew revenue 28% y/y, handily outpacing its other division.

In contrast, the ATS (Advanced Technology Solutions) segment, which includes lower-growth businesses like aerospace and industrial, grew only about 7%. The result is that CCS now makes up the majority of Celestica’s business and is pulling up the company’s overall growth rate and profitability.

The 800G cycle is not a one-and-done story, either. It’s part of a continuum of network upgrades that will continue as AI workloads scale. Once 800G is widely deployed (likely through 2025), the industry will set its sights on the next jump: 1.6 Tbps (1600G) switches.

In fact, Celestica is already preparing for that. On the earnings call, CEO Rob Mionis revealed that Celestica “has been awarded our second 1.6T switching program with another large hyperscaler, which will begin ramping in 2026.” This new program involves designing and producing an AI-optimized networking rack (with advanced cooling technology) for the customer.

This is a big deal: it means Celestica’s clients trust it enough to collaborate on the bleeding edge of network tech. By 2026, when 1.6T switches start rolling out, Celestica should have an inside track thanks to these early design wins. And remember, every time network bandwidth doubles (400G → 800G → 1.6T), it enables larger AI clusters and more powerful models. Celestica stands to ride each of those waves, just as it’s currently riding the 800G wave. The CEO himself put it plainly: the success Celestica is having in 800G “could give it an upper hand when the next upgrade comes.”

Nvidia and OpenAI’s 10GW Gambit: More Fuel for AI Infrastructure

The AI arms race took a dramatic turn recently with a partnership between Nvidia (NVDA) and OpenAI. Just a few days ago, the two companies announced plans to deploy 10 gigawatts of NVIDIA AI systems for OpenAI’s next-generation AI infrastructure.

To put that in perspective, 10 GW is an almost unfathomable amount of compute. We’re talking about millions of GPUs worth of capacity, built out over the next few years. The first 1 GW of this will come online in late 2026, and Nvidia is so confident in the plan that it’s willing to invest up to $100 billion in OpenAI to support the rollout.
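A rough sketch of why 10 GW translates into “millions of GPUs.” The all-in power per GPU (the chip plus its share of host CPUs, networking, cooling, and facility overhead) is my assumption, not a figure from the announcement; I use a hypothetical range of 1.5 to 3 kW.

```python
def gpus_supported(total_power_watts: float, watts_per_gpu_all_in: float) -> float:
    """How many GPUs a power budget can feed, given an assumed all-in draw per GPU."""
    return total_power_watts / watts_per_gpu_all_in


TOTAL_POWER_W = 10e9  # 10 gigawatts, the announced build-out

# Hypothetical all-in kW per GPU (GPU + host, networking, cooling, facility overhead)
for kw in (1.5, 2.0, 3.0):
    count = gpus_supported(TOTAL_POWER_W, kw * 1_000)
    print(f"{kw:.1f} kW per GPU -> ~{count / 1e6:.1f} million GPUs")
```

Across that assumed range, 10 GW lands somewhere between roughly 3 and 7 million GPUs, consistent with the “millions of GPUs” framing.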

Why does this matter for Celestica?

Because all that planned AI compute has to live somewhere, in some data center, connected by somebody’s hardware. OpenAI doesn’t build its own servers or networking gear from scratch; rather, it partners with cloud providers and hardware experts.

Nvidia, of course, will supply the GPUs and systems. But Nvidia isn’t in the business of manufacturing every piece of a data center; they rely on a vast supply chain. Celestica is very likely part of that chain, at least indirectly.

For example, Microsoft (MSFT), a major backer of OpenAI, could be the one hosting this 10GW of compute in its Azure data centers, and Microsoft is known to be a Celestica customer for certain data center hardware. Even if it isn’t Microsoft, the “broad network of collaborators,” which includes Oracle (which has already announced a massive cloud services deal with OpenAI), SoftBank, and others, will all need to massively scale their AI infrastructure, from racks and power supplies to high-speed switches. Celestica has offerings in all of these areas.

The Nvidia–OpenAI announcement basically telegraphs that AI capex will remain in high gear for years to come. As Jensen Huang (Nvidia’s CEO) put it, this partnership is “deploying 10 gigawatts to power the next era of intelligence.”

For Celestica, more AI capex across the industry means more demand for the kinds of products it makes. It’s impossible to pinpoint exactly how much of that 10GW build-out will flow into Celestica’s order book, but the directional impact is clear: it’s positive.

This was arguably the most significant AI infrastructure news of the year, and it reinforces the idea that Celestica’s biggest customers (the hyperscalers) will be racing to deploy bigger, faster AI farms. They’ll need 800G and later 1.6T networks to do it. In short, Nvidia and OpenAI just gave the whole AI supply chain (including CLS) another green light to keep expanding.

It’s worth noting that Celestica’s management has already been upbeat about the demand outlook prior to this news. On the Q2 2025 call, the CFO highlighted that hyperscaler demand remains very strong through the back end of this year and that Celestica has visibility with customers into the first half of 2026, with “no slowdown” in sight. The company is even beginning to ramp a next-gen AI compute server program for a large hyperscaler in Q3, indicating a continual pipeline of new projects.

This aligns perfectly with what we’re seeing from Nvidia/OpenAI. The big players are in an expansion mode, not a pause. That adds confidence that Celestica’s growth surge has legs well beyond just a quarter or two.

The Oracle–OpenAI–Nvidia Triangle: Money, Chips, and Cloud

The 10GW plan sits inside a much bigger set of commitments. Oracle announced a $300B cloud services deal with OpenAI, while Nvidia is pledging up to $100B to OpenAI to pre-finance GPU purchases. Oracle (ORCL), in turn, has to buy billions of dollars of Nvidia chips to deliver that capacity. It’s a high-stakes loop that benefits each party but also raises questions about sustainability and counterparty risk. Countless memes are circulating on this point, and I’ve found more than a few of them hilarious.

I don’t want to pull this piece too far away from Celestica, but this triangle is too important to gloss over. I’ll take a deeper look at these announcements in Weekly #49 this Sunday.

For now, the key takeaway is simple: these deals are bullish because each one points to more hardware orders for hyperscaler data centers, exactly the space Celestica supplies. But they also carry risk. If any of these players slow spending or renegotiate, the ripple effect could travel down the supply chain.

Valuation: Updating the Thesis and Raising the Target to $320

Back in early August (just after the Q2 results), I published an updated target price for Celestica at $253 per share, up from my prior estimate.

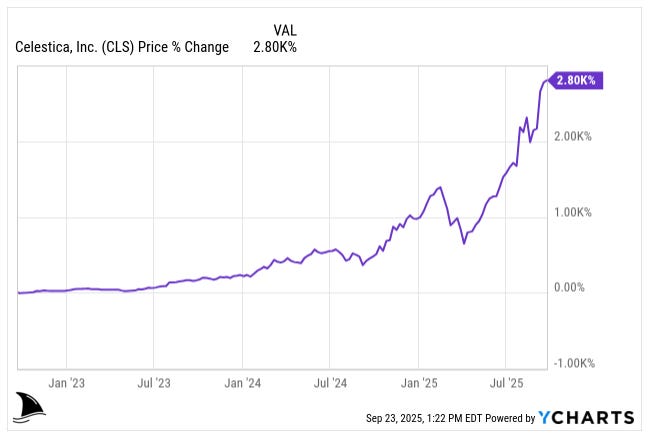

At the time, the stock had surged into the low $200s, and $253 represented what I felt was fair value based on the information available then. Within just seven weeks of that revision, Celestica’s stock hit that $253 target.

The market essentially agreed with the bullish case faster than I anticipated. With the shares recently trading around $250, it’s time to reassess and see if there’s still upside ahead.

After incorporating the latest developments (especially the Nvidia/OpenAI 10GW news and Celestica’s continued outperformance), I’m comfortable raising my target to $320 per share.

Why $320?

Two main changes in my valuation assumptions drive this increase:

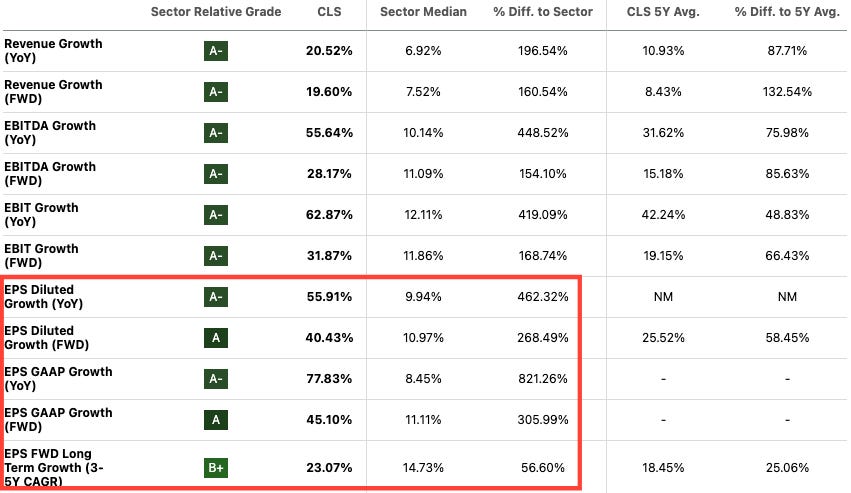

Adjustment #1. Higher Growth in 2025 for the CCS Segment

I am increasing my 2025 revenue growth assumption for Celestica’s CCS segment from about 27% to 35%. Simply put, I see no signs of slowdown in the demand for Celestica’s AI-related hardware; if anything, demand is accelerating.
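The effect of moving the growth assumption is easy to quantify relative to any starting point. This sketch uses a hypothetical 2024 CCS revenue index of 100 rather than Celestica’s reported dollar figure, so only the ratio is meaningful.

```python
BASE_2024 = 100.0  # hypothetical index for 2024 CCS revenue, not the reported figure

old_2025 = BASE_2024 * 1.27  # prior assumption: ~27% growth
new_2025 = BASE_2024 * 1.35  # revised assumption: 35% growth

uplift = new_2025 / old_2025 - 1
print(f"2025 CCS revenue is {uplift:.1%} higher under the revised assumption")
```

The revised assumption puts 2025 CCS revenue about 6.3% above the old one. In a typical DCF, later forecast years compound off that 2025 base, so the higher starting point carries through the rest of the projection.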

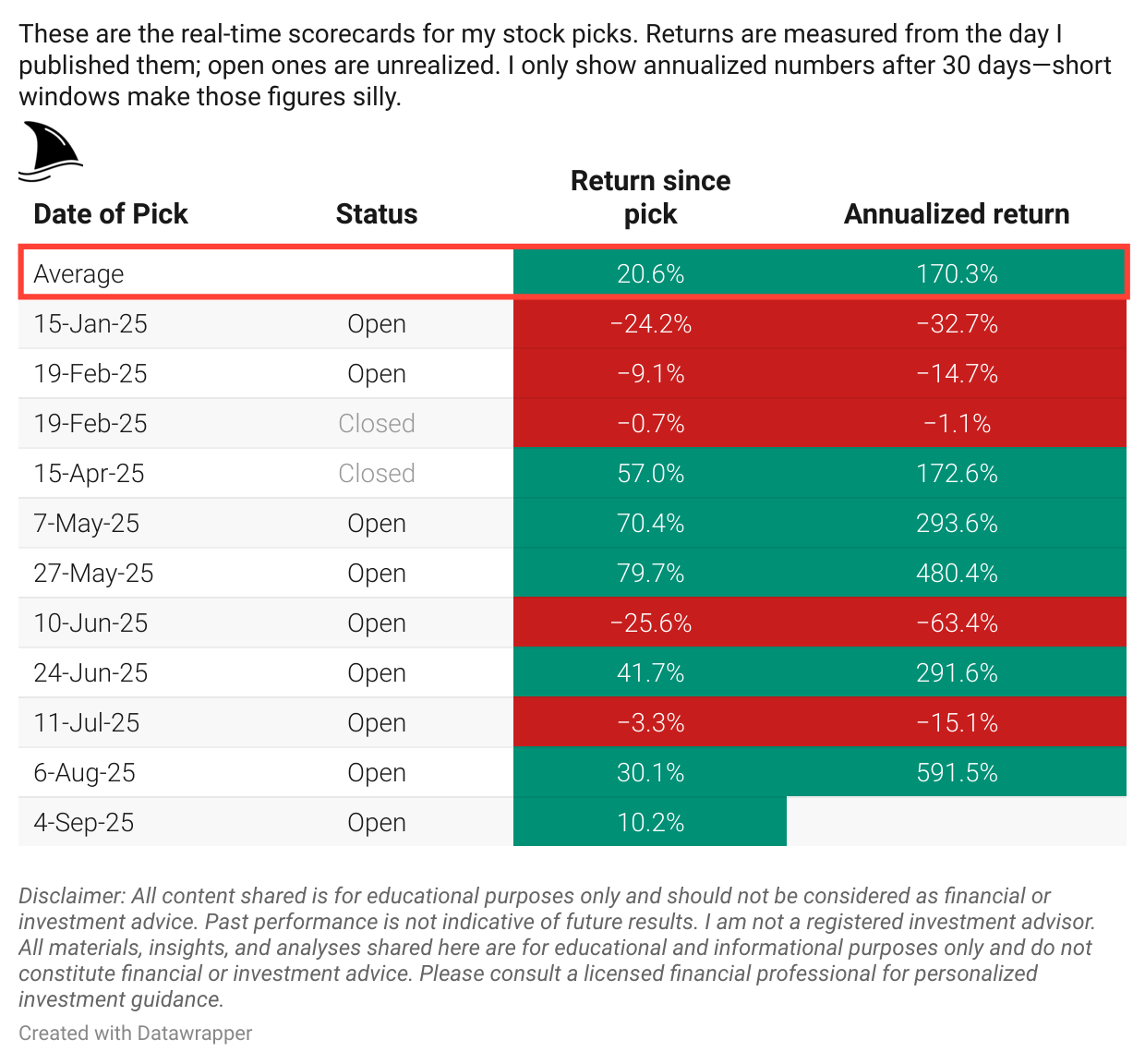

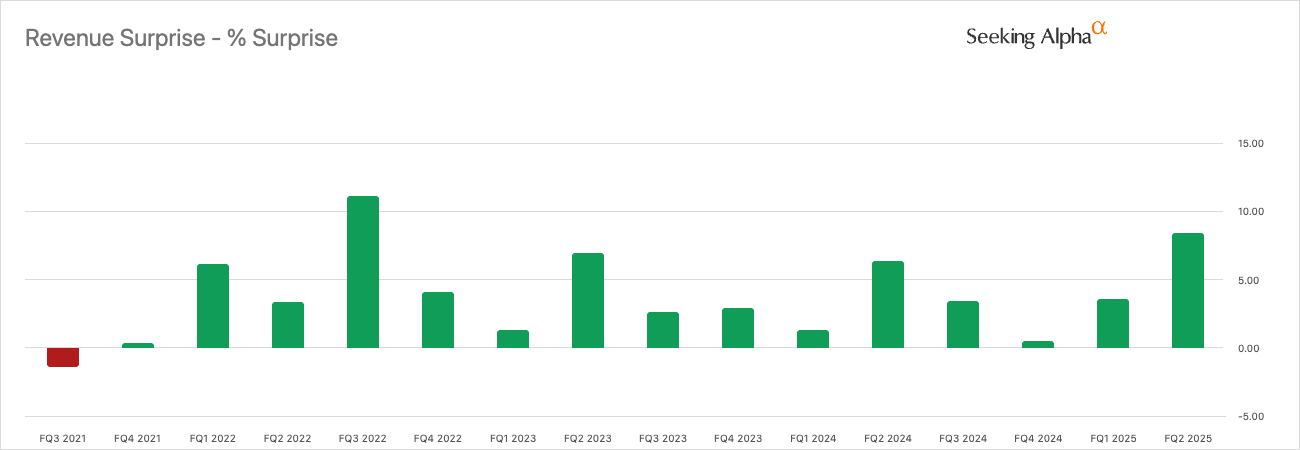

Management’s official guidance of roughly 30% CCS growth already looked conservative. Celestica has a clear track record of issuing cautious guidance and then consistently surprising to the upside, as shown by its repeated earnings beats over the past several quarters.

On the Q2 call, they raised full-year revenue guidance to $11.5 billion but noted that the figure built in a cushion for unforeseen events. With the third quarter nearly complete, no such headwinds materializing, and even management admitting that the $11.5 billion outlook was conservative, this is yet another data point suggesting CCS revenue could comfortably grow more than 30%.

Given that every one of Celestica’s top customers is now ramping 800G programs (and new programs like next-gen AI servers are starting up), a mid-30s growth rate for CCS in 2025 feels achievable. The Nvidia/OpenAI partnership solidifies this view: it signals that AI capex will remain a priority for the industry. Even if Celestica only captures a small portion of the OpenAI-related build-out, the broader “AI infrastructure boom” pie is getting larger.

Thus, I feel justified in pushing the CCS growth assumption a bit higher in my model. To be clear, I’m not counting on another 82% jump in HPS (Hardware Platform Solutions, Celestica’s own-design hardware business) like this past quarter, but I do think Celestica can grow its cloud/comm revenues well above 30% for another year before things normalize.

Adjustment #2. Slightly Lower Cost of Capital (WACC)

I’m trimming my discount rate from about 8.7% to 8.3%. This may seem like a fine detail, but in a discounted cash flow valuation, a lower discount rate boosts the present value of future cash flows, raising the target price.
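A minimal sketch of those mechanics, assuming a hypothetical five-year free-cash-flow stream and a hypothetical 3% terminal growth rate (neither comes from my actual model), discounted first at 8.7% and then at 8.3%.

```python
def present_value(cash_flows, discount_rate, terminal_growth):
    """PV of explicit yearly cash flows plus a Gordon-growth terminal value."""
    pv = 0.0
    for year, cf in enumerate(cash_flows, start=1):
        pv += cf / (1 + discount_rate) ** year
    # Terminal value at the end of the final forecast year, discounted back to today
    last_year = len(cash_flows)
    terminal = cash_flows[-1] * (1 + terminal_growth) / (discount_rate - terminal_growth)
    pv += terminal / (1 + discount_rate) ** last_year
    return pv


FCF = [100, 120, 140, 160, 180]  # hypothetical free cash flow, arbitrary units
G = 0.03                         # hypothetical terminal growth rate

pv_old = present_value(FCF, 0.087, G)
pv_new = present_value(FCF, 0.083, G)
print(f"PV at 8.7%: {pv_old:,.0f}  PV at 8.3%: {pv_new:,.0f}  change: {pv_new / pv_old - 1:+.1%}")
```

In this toy example, a 0.4-point cut lifts the present value by roughly 8%. The terminal value dominates the total, and it is very sensitive to the gap between the discount rate and terminal growth, which is why a “fine detail” moves the answer this much.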

Why lower the WACC?

A few reasons:

Reason #1. Celestica’s revenue visibility and backlog

Those are better now than before, reducing the perceived risk. The company has orders mapping into 2026 for its largest programs, and a customer base that is investing aggressively. That reduces uncertainty in near-term forecasts.

Reason #2. The margin profile is stronger thanks to HPS

Higher margins and ROIC (Celestica’s adjusted ROIC hit ~35.5% in Q2) give a cushion to weather any hiccups, again lowering risk.

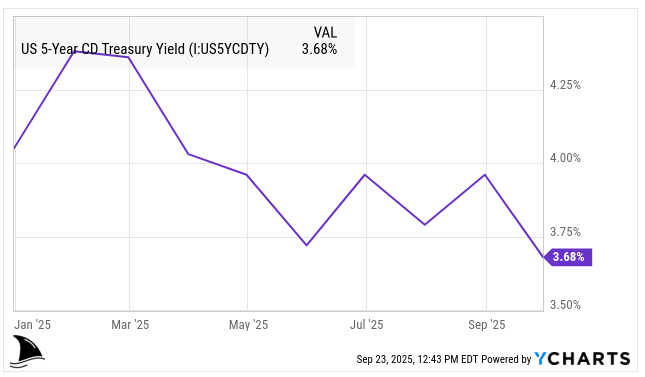

Reason #3. The macro environment has shifted a bit toward “risk-on”

We’ve seen a pullback in bond yields…

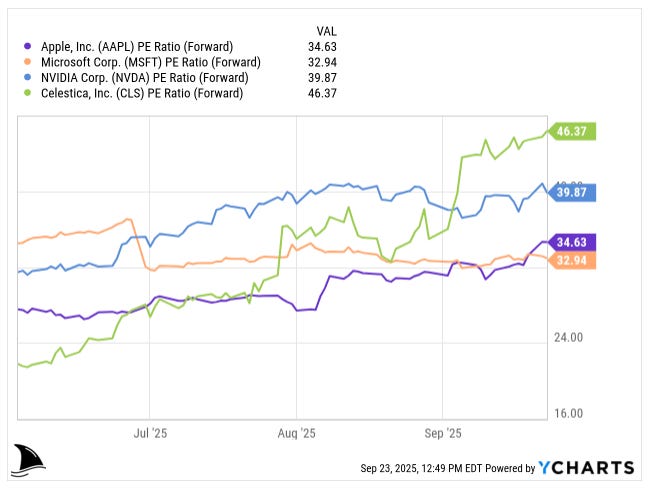

… and a resurgence in tech stock multiples in recent months.

Investors are generally accepting a lower equity risk premium for growth names. Celestica’s beta (its volatility relative to the market) might still be high, but the market’s tolerance for high-growth, high-multiple stories has improved. In essence, if investors are willing to value Celestica more like a secular growth tech company (and less like a low-margin manufacturer), the required return can be a tad lower.
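The link between bond yields, the equity risk premium, and the discount rate runs through the standard CAPM and WACC formulas. The sketch below shows the direction of the effect using hypothetical inputs: the risk-free rates, beta, equity risk premiums, cost of debt, tax rate, and capital weights are all illustrative assumptions, not the figures behind my 8.7% and 8.3%.

```python
def capm_cost_of_equity(risk_free: float, beta: float, equity_risk_premium: float) -> float:
    """CAPM: required equity return = risk-free rate + beta * equity risk premium."""
    return risk_free + beta * equity_risk_premium


def wacc(cost_equity: float, cost_debt: float, tax_rate: float, equity_weight: float) -> float:
    """Weighted average cost of capital; the cost of debt is taken after tax."""
    debt_weight = 1.0 - equity_weight
    return equity_weight * cost_equity + debt_weight * cost_debt * (1 - tax_rate)


# All inputs below are hypothetical illustrations
BETA = 1.1
EQUITY_WEIGHT = 0.97  # assumes a mostly equity-financed capital structure
COST_OF_DEBT = 0.06
TAX_RATE = 0.20

before = wacc(capm_cost_of_equity(0.043, BETA, 0.040), COST_OF_DEBT, TAX_RATE, EQUITY_WEIGHT)
after = wacc(capm_cost_of_equity(0.041, BETA, 0.039), COST_OF_DEBT, TAX_RATE, EQUITY_WEIGHT)
print(f"WACC before: {before:.2%}  after lower yields and ERP: {after:.2%}")
```

Because the equity portion dominates for a mostly equity-financed company, a modest drop in both the risk-free rate and the equity risk premium flows almost directly into a lower WACC.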

Combining those two adjustments, I arrive at a fair value close to $320. This implies a valuation that, yes, is quite rich on traditional metrics, but I would argue it’s warranted by Celestica’s transformation.

At a $320 stock price and using the newly guided $5.50 EPS for 2025, the forward P/E would be about 58. That certainly sounds expensive, and indeed, Celestica’s forward P/E is already higher than Nvidia’s.
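The forward P/E is simply price divided by expected EPS. A quick check using the $5.50 guidance, at both the $320 target and the roughly $250 recent price mentioned earlier:

```python
def forward_pe(price: float, forward_eps: float) -> float:
    """Forward price-to-earnings multiple."""
    return price / forward_eps


EPS_2025 = 5.50  # newly guided 2025 EPS
for price in (250, 320):
    print(f"${price}: forward P/E = {forward_pe(price, EPS_2025):.1f}")
```

So the stock already trades at roughly 45x 2025 EPS near $250, and the $320 target implies about 58x.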

Some bearish investors argue CLS doesn’t deserve a richer multiple than Nvidia, since Nvidia is a unique behemoth creating new markets, whereas Celestica is an enabler rather than an innovator.

I hear that critique.

It’s true Celestica is not about to invent the next GPU or set the AI algorithm agenda. However, a high P/E today is not the whole story. Celestica’s earnings are growing extremely fast (78% y/y and 45% forward).

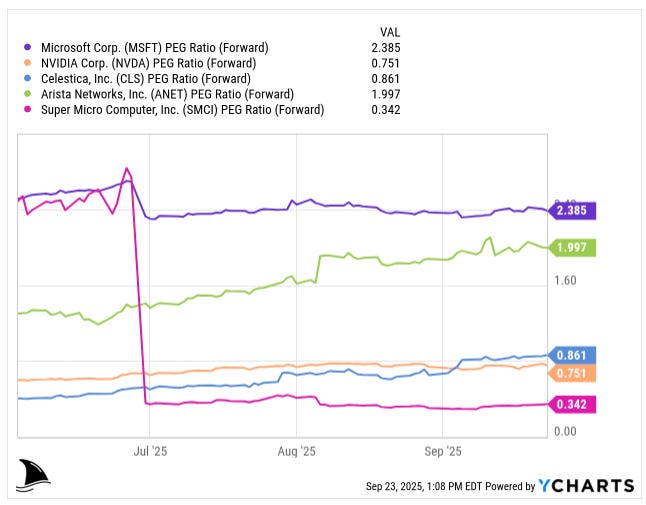

So its PEG ratio (P/E to growth) is actually reasonable. Celestica’s forward PEG is around 0.86, well below 1.0, which usually signals undervaluation for a growth stock.
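PEG divides the P/E by the expected earnings growth rate expressed in percentage points. The inputs behind the 0.86 figure aren’t spelled out here, so this sketch only shows the formula and how sensitive the result is to the growth rate chosen, using the P/E at the $320 target and the two growth rates quoted above.

```python
def peg_ratio(pe: float, growth_pct: float) -> float:
    """P/E divided by expected EPS growth in percentage points (e.g. 45 for 45%)."""
    return pe / growth_pct


PE_AT_TARGET = 320 / 5.50  # about 58x 2025 EPS

for label, growth in [("45% forward growth", 45), ("78% trailing growth", 78)]:
    print(f"{label}: PEG = {peg_ratio(PE_AT_TARGET, growth):.2f}")
```

The PEG swings from about 0.75 to about 1.29 depending on which growth rate is plugged in, so it is best read as a range rather than a single precise number.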

For those still worried about the elevated share price: remember that we are in an AI supercycle. In such cycles, stocks can remain “expensive” for an extended period because new bullish developments (like that 10GW partnership) keep coming and overshadowing valuation concerns.

That doesn’t mean one should throw caution to the wind, but it means a stock like CLS can grow into its valuation rather than crashing back to a low P/E immediately.

Risk and Reward: Keeping Calm Through the Volatility

None of this is to say Celestica’s stock will go straight up. In fact, after a +2,800% run-up in three years, some consolidation or pullback is almost inevitable.
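For a sense of scale, a +2,800% gain means the share price multiplied about 29 times. Annualizing that over three years with the standard compound-growth formula:

```python
def cagr(total_return_pct: float, years: float) -> float:
    """Compound annual growth rate implied by a cumulative percentage gain."""
    multiple = 1 + total_return_pct / 100
    return multiple ** (1 / years) - 1


print(f"Annualized: {cagr(2800, 3):.0%}")
```

That works out to roughly 207% a year, or about a tripling of the share price every year for three years.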

The stock has caught the attention of many investors and is no longer the under-the-radar bargain it once was. We’ve already seen brief bouts of profit-taking. For instance, one Seeking Alpha analyst downgraded CLS to a hold after Q2, citing the 26% post-earnings spike and stretched valuation metrics vs. peers like AMD and Broadcom. Another even slapped a “strong sell,” noting that on some measures, Celestica was trading at or above Nvidia-like multiples.

Skepticism is healthy here. A forward P/E in the 50s means the stock is priced for a lot of growth, and any stumble (an order delay, a margin miss, etc.) could cause a sharp drop.

These are yellow flags that often precede a cooling-off period. I wouldn’t be surprised to see the share price bounce around or even dip below $250 in the near term if the market rotates out of tech temporarily or if there’s a scare (e.g., a rumor of an AI spending pause).

However, volatility is the price of admission for outsized long-term gains.

The company’s customer base is concentrated. Its top 10 customers made about 73% of revenue in 2024, and its largest customer alone represented roughly 28% of total revenue that year (the second largest was 11%).

Management has said their goal is to have each of the big hyperscalers account for around 10% of sales rather than one dominant client at 40%, but as of the last filing the mix is still top-heavy. That said, the wins are spread across several hyperscalers and programs, which means the growth isn’t coming from just a single client.

Another risk to watch is component supply or technology transitions. For example, if a next-gen chip had delays, it could impact timing for Celestica’s programs. Also, as Celestica takes on more design work (ODM projects), it bears more responsibility, which is great for margins but means they need to execute on more complex deliverables (like that whole 1.6T rack design). It’s not a trivial task, but so far they’ve proven capable.

From Bargain to Compounder: How I’m Thinking Now

In weighing all this, I maintain a calm, long-term perspective. The temptation after a huge rally is either to get greedy (“this is the next Nvidia!” euphoria) or fearful (“it’s a bubble about to burst!”). I’m trying to avoid both extremes. Celestica above $250 is no longer the screaming bargain it was at $10, $50, or $100; those days are gone.

From here, the stock’s trajectory will mirror its earnings growth more closely. And I happen to believe those earnings will keep rising strongly for the next couple of years, driven by the trends we discussed: 800G deployment, the upcoming 1.6T cycle, and relentless AI investment by big players. So even if the stock is choppy quarter to quarter, I see it as a long-term compounding story. Pullbacks, if and when they happen, can be opportunities to add, as long as the thesis hasn’t fundamentally changed.

What do you think? Does the 800G transition make Celestica a long-term compounder or are we near peak hype?
